Motivation

Until now, as an undergraduate student, I was a penny-pincher who usually relied on Colab, Kaggle, or (occasionally) a friend’s server whenever I needed a GPU.

This time, setting up a GPU took longer than I expected, so I’m sharing the several things that stood in my way.

Written on Oct 24, 2019. If you think this is out of date, please don’t take it on blind faith.

Just for the record, I’ve used Kaggle, Colab, GCP, Azure, and EC2 as GPU clouds.

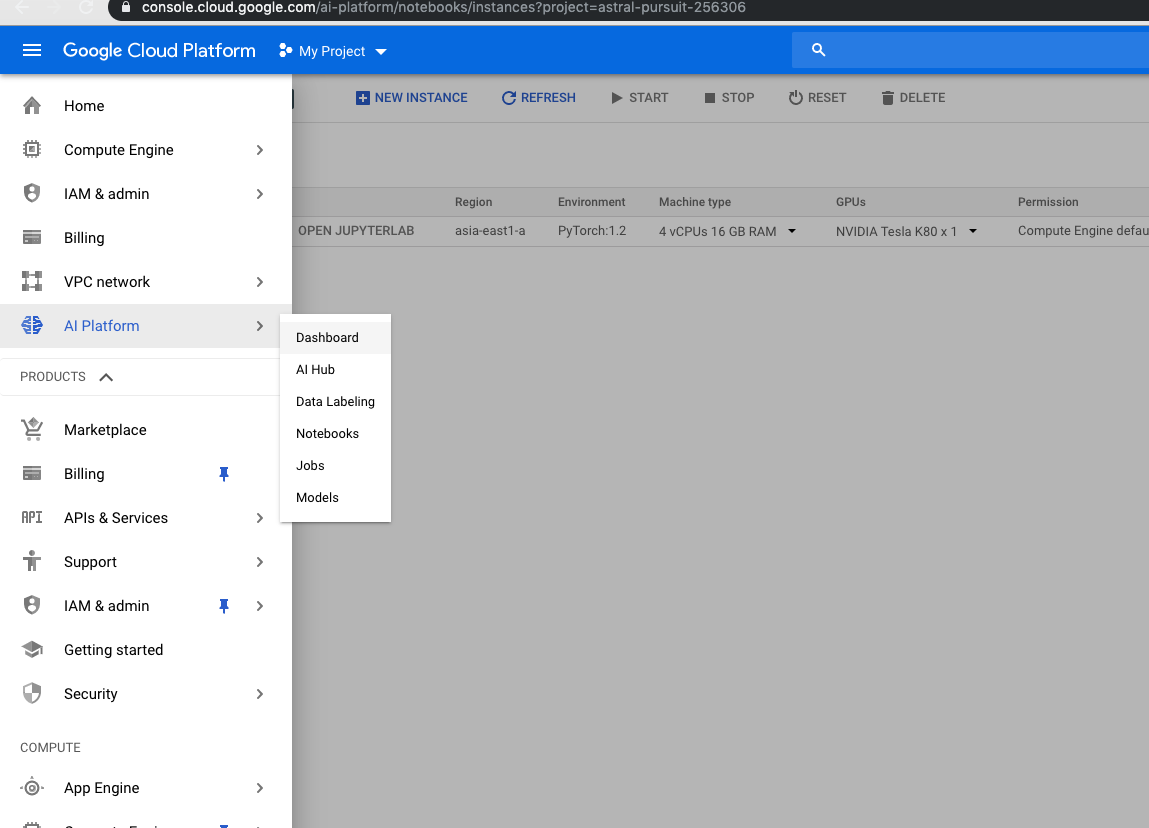

1. I did not know there was a JupyterLab option in Google Cloud Platform.

When GCP first came out, there was no AI Platform service. So I did everything myself, from starting the VM instance to launching Jupyter and installing packages. (And I learned 🤗)

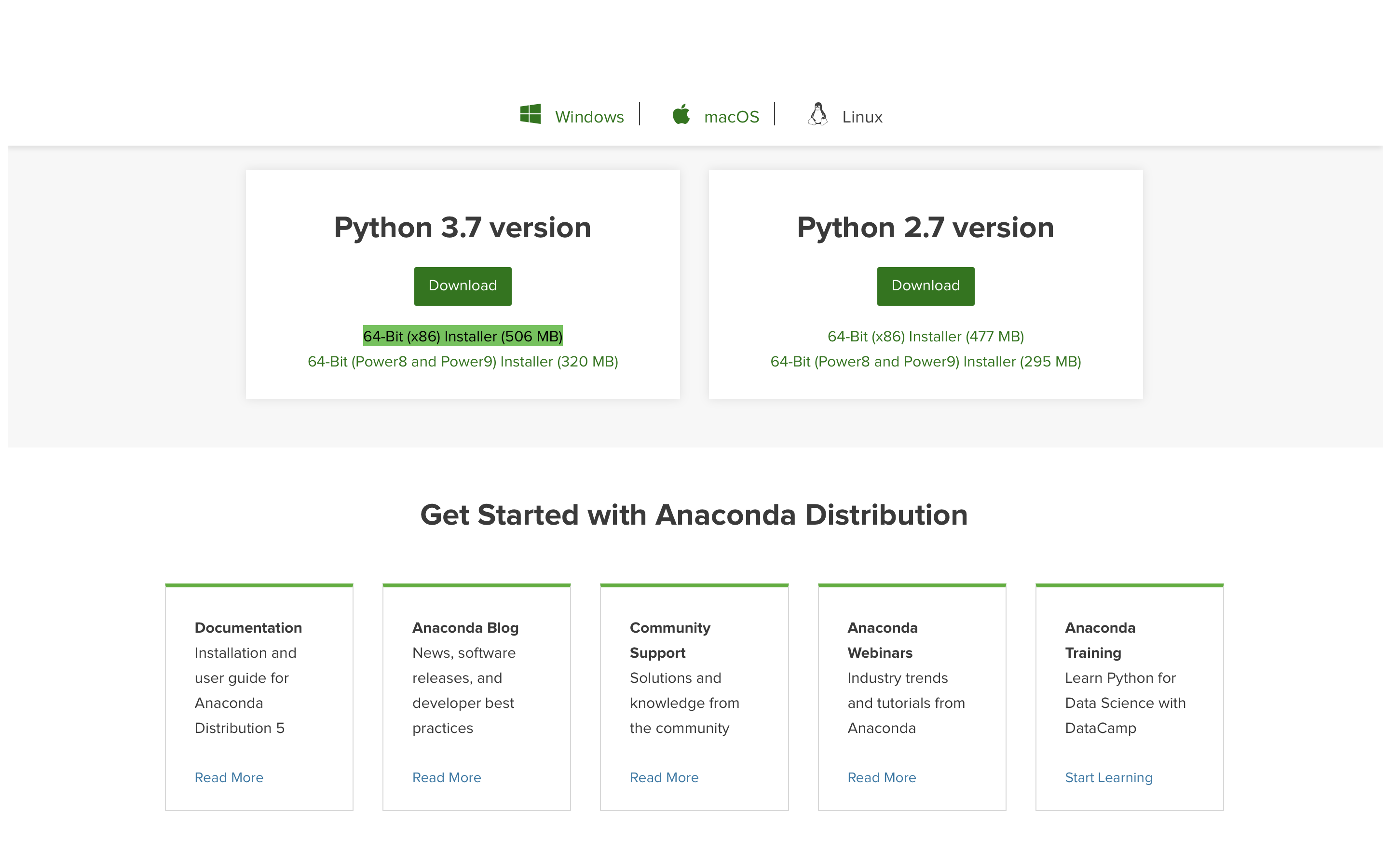

$ curl -O https://repo.continuum.io/archive/Anaconda3-5.0.1-Linux-x86_64.sh

[Downloading conda in ssh]

I created a VM instance and selected the zone, machine type, and disk type. Then I defined firewall rules and, in the SSH terminal, installed Jupyter and other packages.
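As a rough sketch of those manual steps from the command line, assuming the `gcloud` CLI is installed and logged in: the instance name `gpu-box`, the zone `asia-east1-a`, the machine type, the T4 GPU, the rule name `allow-jupyter`, and the IP `203.0.113.10` are all placeholders, not the values used for this post. The script only prints the commands unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
# Sketch only: every name and value below is a placeholder.
# DRY_RUN=1 (the default) prints each command instead of running it.
DRY_RUN="${DRY_RUN:-1}"

run() {
  if [ "$DRY_RUN" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

gcp_manual_setup() {
  instance="$1"
  zone="$2"
  my_ip="$3"

  # 1. Create the VM with one GPU attached. GPU instances cannot
  #    live-migrate, so the maintenance policy must be TERMINATE.
  run gcloud compute instances create "$instance" \
    --zone="$zone" \
    --machine-type=n1-standard-4 \
    --accelerator=type=nvidia-tesla-t4,count=1 \
    --maintenance-policy=TERMINATE \
    --boot-disk-size=100GB

  # 2. Open the Jupyter port, but only to your own IP address.
  run gcloud compute firewall-rules create allow-jupyter \
    --allow=tcp:8888 --source-ranges="$my_ip/32"

  # 3. Log in; Anaconda and Jupyter are then installed inside the VM.
  run gcloud compute ssh "$instance" --zone="$zone"
}

gcp_manual_setup gpu-box asia-east1-a 203.0.113.10
```

The default image has no GPU driver, so the driver still has to be installed inside the VM before the GPU can be used.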

But you can do all of this just by using AI Platform.

[AI Platform]

I think it especially saves time if you live in Asia-Pacific, where Google does not offer many GPU resources.

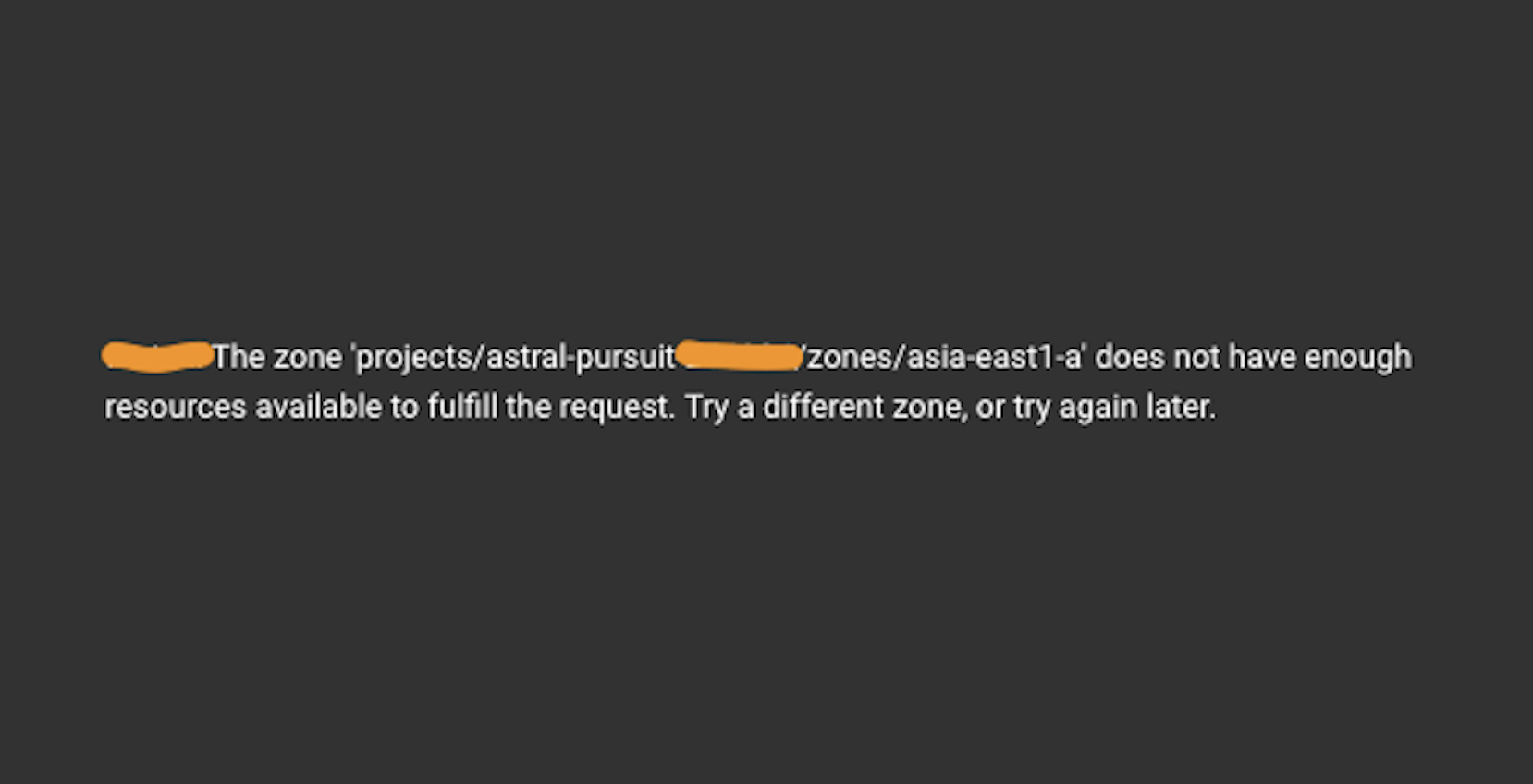

2. Consider whether the platform has limited resources in the region you live in.

I live in South Korea, East Asia, and it seems this region has a lot of GPU limitations (except on AWS, which is quite expensive).

Taiwan was the only region where I could launch my own VM with a GPU (I tried all the other regions in the list), and even there it worked only some of the time. 😥

After launching, I got some work done, but the next day I could not start the VM. (I didn’t count, but I kept trying for a few hours because I didn’t want to lose any more time…)

The instance endlessly failed to start, so I chose to move to AWS as an alternative.
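One way to check which GPU types a platform offers near you before committing to it is sketched below for GCP, assuming the `gcloud` CLI is installed and logged in. The helper name `list_gpus_in` is made up for this example. The listing only shows which GPU types a zone offers; it says nothing about whether capacity is free at the moment you start the VM, which was the actual problem here.

```shell
#!/bin/sh
# Sketch: list the GPU types GCP offers in zones whose name contains a prefix.
# list_gpus_in is a hypothetical helper, not part of gcloud.
list_gpus_in() {
  prefix="${1:-asia}"
  if ! command -v gcloud >/dev/null 2>&1; then
    echo "gcloud not found; install the Google Cloud SDK first" >&2
    return 127
  fi
  # Keep only the rows that mention the requested zone prefix.
  gcloud compute accelerator-types list | grep -- "$prefix"
}

# Usage: list_gpus_in asia
```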

3. Fast.ai gives a detailed guide and I didn’t know it.

Fast.ai offers a guide for every available platform (Colab, Salamander, Gradient, Kaggle, and so on).

This matters a lot and is really needed, because cloud computing options vary with the occasion and purpose.

I didn’t know fast.ai has a manual for running on GCP, and I think that is as good a reason as any for it to have taken me so long.

It helped me so much when I set up AWS and saved me time.

I don’t want to read the whole Amazon manual.. (It is recommended.. but I’d rather read Pro Git now…)

ssh -i ~/.ssh/<your_private_key_pair> -L localhost:8888:localhost:8888 ubuntu@<your instance IP>
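The `-L localhost:8888:localhost:8888` part forwards port 8888 on your own machine to port 8888 on the instance, so a Jupyter server running on the instance shows up at `http://localhost:8888` in your local browser. A small sketch that builds the same command for any key, host, and port follows; `tunnel_cmd`, the key path, and the IP are placeholders made up for this example.

```shell
#!/bin/sh
# Sketch: print the ssh command that forwards a local port to the same port
# on the instance. tunnel_cmd is a hypothetical helper; the "ubuntu" user
# matches the command above.
tunnel_cmd() {
  key="$1"
  host="$2"
  port="${3:-8888}"
  printf 'ssh -i %s -L localhost:%s:localhost:%s ubuntu@%s\n' \
    "$key" "$port" "$port" "$host"
}

# Example with placeholder values (203.0.113.10 is a documentation-only IP).
tunnel_cmd "$HOME/.ssh/my-key.pem" 203.0.113.10

# Then, on the instance, start Jupyter without opening a browser there:
#   jupyter notebook --no-browser --port=8888
```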

4. When building on AWS EC2, you have to wait before extending a volume again right after extending it.

Because an Elastic Block Store (EBS) volume goes through an optimization phase after it is resized, users can’t extend the same volume twice in a row.

Unfortunately, the first time, I didn’t know this (again 👻), and when the VM ran out of space, I roughly doubled the disk capacity (76*2), but it needed more.
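Since the second resize has to wait, it pays to check the modification state and to pick a generous size the first time. The sketch below assumes the AWS CLI is configured, that 76 is in GiB, and that the root filesystem is ext4 on `/dev/xvda1`. The volume ID and device names are placeholders; check yours with `lsblk`. It only prints the commands unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
# Sketch only. The volume ID, /dev/xvda and partition 1 are placeholders;
# resize2fs assumes an ext4 filesystem.
# DRY_RUN=1 (the default) prints each command instead of running it.
DRY_RUN="${DRY_RUN:-1}"

run() {
  if [ "$DRY_RUN" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Run where the AWS CLI is configured (for example, your laptop).
request_resize() {
  volume_id="$1"
  old_gib="$2"
  factor="$3"
  new_gib=$((old_gib * factor))
  # Shows whether an earlier modification is still in progress.
  run aws ec2 describe-volumes-modifications --volume-ids "$volume_id"
  # Asks EBS for the new size in GiB.
  run aws ec2 modify-volume --volume-id "$volume_id" --size "$new_gib"
}

# Run on the instance itself, after the volume reports the new size.
extend_filesystem() {
  run sudo growpart /dev/xvda 1   # grow partition 1 to fill the volume
  run sudo resize2fs /dev/xvda1   # grow the ext4 filesystem to match
  run df -h /                     # confirm the extra space is visible
}

request_resize vol-0123456789abcdef0 76 2
extend_filesystem
```

Passing a larger factor (for example `request_resize vol-0123456789abcdef0 76 3`) in the first request avoids having to wait for a second one.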

<!–

This was my first time setting up a GPU in two years, and it has become a little more complicated than it was two years ago. It was also my first time doing it on my own (maybe not the very first time, but before I had only done it in class or with a friend). I had used Google Colab, Kaggle, GCP JupyterLab, and an EC2 VM a friend set up on AWS. There was an environment variable involved that I did not know about. These days, I could not get resources from Taiwan…

-

I couldn’t notice a deliberate

-

Anyway, in the end I tried GCP on my own and AWS EC2 with fast.ai. But doing it all myself surely takes a lot of time (at this point I wonder why I’m doing this, and I should remind myself, especially since I was studying disk volume optimization).

-

disk volume exceed - https://askubuntu.com/questions/919748/no-space-left-on-device-even-though-there-is
