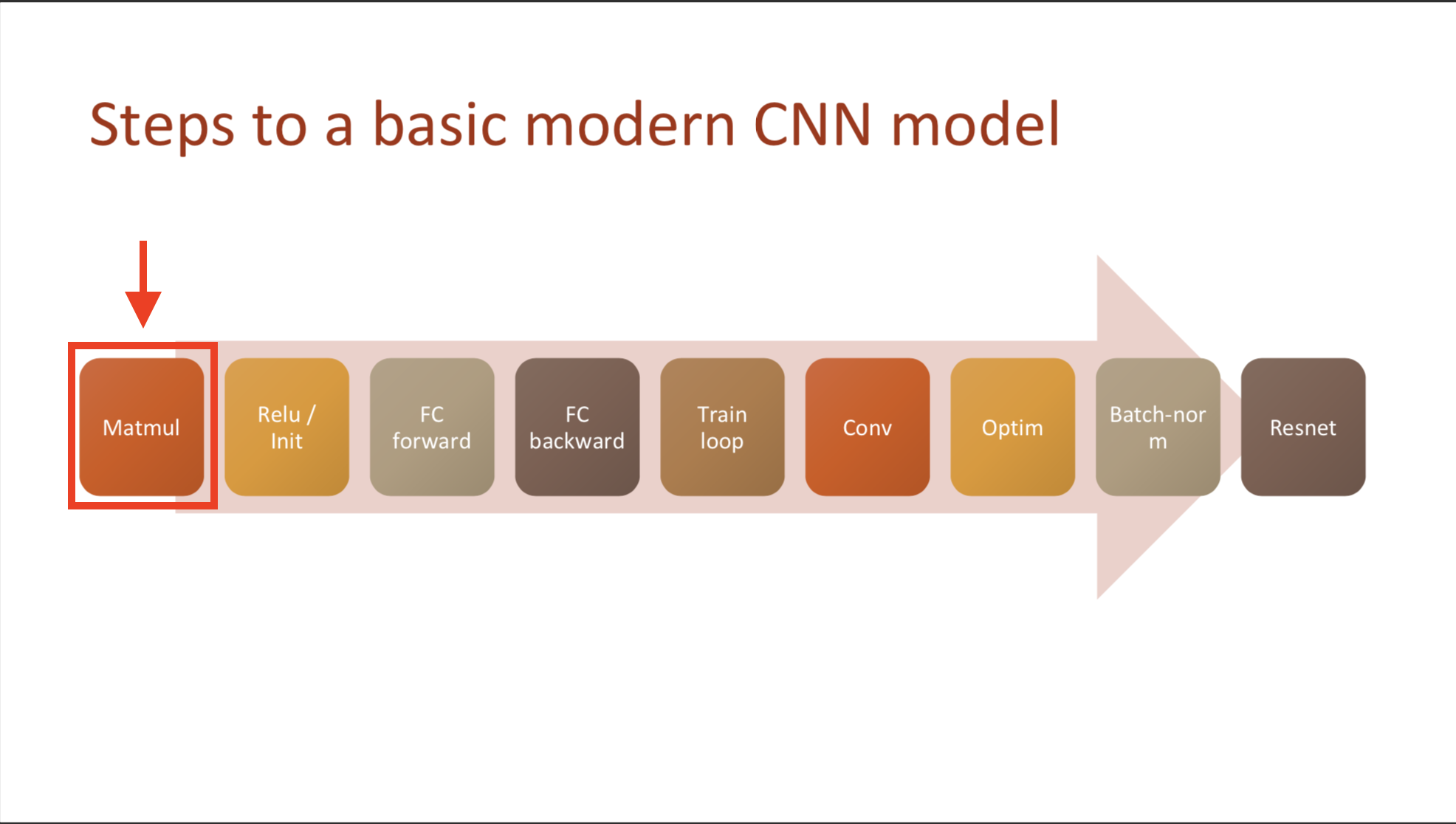

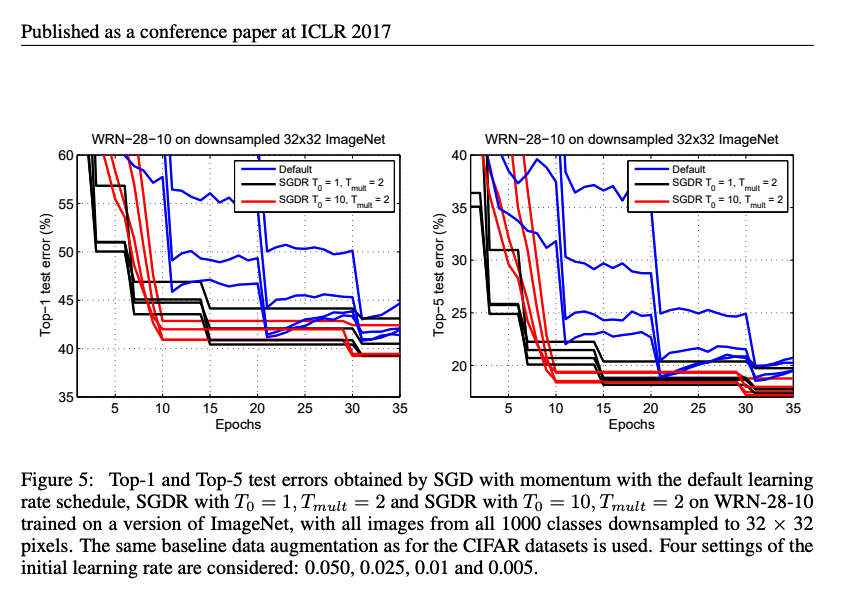

Image reference: https://arxiv.org/pdf/1608.03983v5.pdf

🎮 Q0: Prepare the data, dataset, databunch, learner, and run objects.

🎮 Q1: Implement two new callbacks.

1) Recorder: records the scheduled learning rate and the loss for each training batch, and plots them

- Hint: consider at which points in the training loop you should intercept to record the learning rate and the loss.

2) ParamScheduler: schedules any hyperparameter, as long as it is registered in the optimizer's param groups (part of its state_dict)

- Hint: think about when you can apply the tweaked hyperparameters.

🎮 Q2: Implement annealers that schedule the learning rate over a given period of training.

- Linear, Cosine, Exponential

🎮 Q3: Plot the annealers above, starting at 2 and ending at 0.01 (you'd need monkey-patch code).

- (1) plot each annealer on a separate image

- (2) plot all graphs on one image

🎮 Q4: Implement a combined scheduler that re-scales schedulers according to assigned percentages.

A0

A1

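```python
import matplotlib.pyplot as plt
# Callback (and the Runner attributes used below) come from the earlier lesson notebooks

class Recorder(Callback):
    def begin_fit(self): self.lrs, self.losses = [], []

    def after_batch(self):
        # only record during training, not during validation
        if not self.in_train: return
        self.lrs.append(self.opt.param_groups[-1]['lr'])
        self.losses.append(self.loss.detach().cpu())

    def plot_lr(self):   plt.plot(self.lrs)
    def plot_loss(self): plt.plot(self.losses)
```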

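```python
class ParamScheduler(Callback):
    _order = 1  # sorted after callbacks with the default _order of 0 (e.g. TrainEvalCallback)

    def __init__(self, pname, sched_func): self.pname, self.sched_func = pname, sched_func

    def set_param(self):
        # n_epochs is fractional progress in epochs, so n_epochs/epochs runs from 0 to 1
        for pg in self.opt.param_groups:
            pg[self.pname] = self.sched_func(self.n_epochs/self.epochs)

    def begin_batch(self):
        # set the hyperparameter before the forward/backward pass of each training batch
        if self.in_train: self.set_param()
```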
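To see how the two callbacks cooperate without the lesson's `Runner`, here is a minimal plain-PyTorch sketch that inlines their hooks into a hand-written loop. The model, the random data, the batch count and the `cos_sched` helper are assumptions made for illustration only; the loop advances `n_epochs` by `1/batches_per_epoch` per batch, which is how the fractional epoch counter read by `ParamScheduler` is assumed to behave here.

```python
import math
import torch
from torch import nn

def cos_sched(start, end):
    # same formula as sched_cos in A2, written without the @annealer decorator
    return lambda pos: start + (1 + math.cos(math.pi * (1 - pos))) * (end - start) / 2

torch.manual_seed(0)
model = nn.Linear(10, 1)                              # hypothetical toy model
opt = torch.optim.SGD(model.parameters(), lr=0.1)
sched = cos_sched(0.3, 0.05)

x, y = torch.randn(64, 10), torch.randn(64, 1)        # hypothetical random data
epochs, batches_per_epoch = 2, 4
lrs, losses = [], []
n_epochs = 0.0                                        # fractional training progress, in epochs

for epoch in range(epochs):
    for xb, yb in zip(x.chunk(batches_per_epoch), y.chunk(batches_per_epoch)):
        # ParamScheduler.begin_batch: set lr from the current position in [0, 1]
        for pg in opt.param_groups:
            pg['lr'] = sched(n_epochs / epochs)
        loss = nn.functional.mse_loss(model(xb), yb)
        loss.backward()
        opt.step()
        opt.zero_grad()
        # Recorder.after_batch: log the lr that was actually used, plus the loss
        lrs.append(opt.param_groups[-1]['lr'])
        losses.append(loss.detach().cpu())
        n_epochs += 1 / batches_per_epoch
```

Because the learning rate is written into the param groups before each forward pass and read back afterwards, `lrs` holds exactly the values the optimizer used, decreasing along the cosine curve.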

A2

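```python
import math
from functools import partial

# annealer decorator: turns f(start, end, pos) into a factory,
# so that e.g. sched_lin(start, end) returns a function of pos alone
def annealer(f):
    def _inner(start, end): return partial(f, start, end)
    return _inner

# schedulers: pos runs from 0 (start of the period) to 1 (end of the period)
@annealer
def sched_lin(start, end, pos): return start + pos*(end-start)

@annealer
def sched_cos(start, end, pos): return start + (1 + math.cos(math.pi*(1-pos))) * (end-start) / 2

@annealer
def sched_no(start, end, pos): return start

@annealer
def sched_exp(start, end, pos): return start * (end/start) ** pos
```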
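Written out, with start $s$, end $e$ and position $p \in [0, 1]$, the four annealers above compute:

$$
\begin{aligned}
\text{no}(p)  &= s \\
\text{lin}(p) &= s + p\,(e - s) \\
\text{cos}(p) &= s + \frac{1 + \cos\big(\pi(1 - p)\big)}{2}\,(e - s) \\
\text{exp}(p) &= s \left(\frac{e}{s}\right)^{p}
\end{aligned}
$$

At $p = 0$ every schedule returns $s$; at $p = 1$ the linear, cosine and exponential schedules return $e$ (for the cosine, $\cos(\pi) = -1$ and $\cos(0) = 1$). Taking logs, $\log \text{exp}(p) = \log s + p(\log e - \log s)$, so the exponential schedule is linear in log space, which suits learning rates spanning orders of magnitude; it needs $e/s > 0$.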

A3

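```python
import torch
import matplotlib.pyplot as plt
# Monkey patch used in the lesson so matplotlib can plot tensors; only needed on
# older PyTorch versions whose tensors lacked .ndim:
# torch.Tensor.ndim = property(lambda x: len(x.shape))

annealings = "NO LINEAR COS EXP".split()

a = torch.arange(0, 100)            # x-axis: step index
p = torch.linspace(0.01, 1, 100)    # positions in training

fns = [sched_no, sched_lin, sched_cos, sched_exp]
for fn, t in zip(fns, annealings):
    f = fn(2, 1e-2)
    plt.plot(a, [f(o) for o in p], label=t)
plt.legend();
```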
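The code above draws all curves on one image, which is part (2) of Q3. For part (1), one option is a row of subplots, one per annealer. This sketch redefines the annealers so it runs on its own and uses plain Python lists instead of tensors, so no monkey patch is involved; the `Agg` backend line is only there for headless runs and can be dropped in a notebook.

```python
import math
from functools import partial

import matplotlib
matplotlib.use("Agg")  # headless backend; drop this line in a notebook
import matplotlib.pyplot as plt

def annealer(f):
    def _inner(start, end): return partial(f, start, end)
    return _inner

@annealer
def sched_no(start, end, pos): return start
@annealer
def sched_lin(start, end, pos): return start + pos * (end - start)
@annealer
def sched_cos(start, end, pos): return start + (1 + math.cos(math.pi * (1 - pos))) * (end - start) / 2
@annealer
def sched_exp(start, end, pos): return start * (end / start) ** pos

names = "NO LINEAR COS EXP".split()
fns = [sched_no, sched_lin, sched_cos, sched_exp]
positions = [i / 99 for i in range(100)]  # 100 evenly spaced points in [0, 1]

# one subplot per annealer, sharing the y-axis so the shapes are comparable
fig, axes = plt.subplots(1, len(fns), figsize=(16, 3), sharey=True)
for ax, fn, name in zip(axes, fns, names):
    f = fn(2, 1e-2)
    ax.plot(positions, [f(pos) for pos in positions])
    ax.set_title(name)
    ax.set_xlabel("position in training")
fig.tight_layout()
```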

A4

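```python
import torch
from torch import tensor
# listify comes from the earlier lesson notebooks

def combine_scheds(pcts, scheds):
    assert sum(pcts) == 1.
    pcts = tensor([0] + listify(pcts))
    assert torch.all(pcts >= 0)
    pcts = torch.cumsum(pcts, 0)   # phase boundaries, e.g. [0., 0.3, 1.]
    def _inner(pos):
        # index of the last boundary that pos has reached
        idx = int((pos >= pcts).nonzero().max())
        # at pos == 1 every boundary is reached; clamp so we stay in the last phase
        # (works for any number of schedulers, not just two)
        idx = min(idx, len(scheds) - 1)
        # rescale pos to [0, 1] within the current phase
        actual_pos = (pos-pcts[idx]) / (pcts[idx+1]-pcts[idx])
        return scheds[idx](actual_pos)
    return _inner
```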

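```python
# two cosine phases: 0.3 -> 0.6 over the first 30% of training, then 0.6 -> 0.2
sched = combine_scheds([0.3, 0.7], [sched_cos(0.3, 0.6), sched_cos(0.6, 0.2)])

# AvgStatsCallback, accuracy, create_learner, get_model_func, loss_func, data and Runner
# come from the earlier lesson notebooks
cbfs = [Recorder,
        partial(AvgStatsCallback, accuracy),
        partial(ParamScheduler, 'lr', sched)]

learn = create_learner(get_model_func(0.3), loss_func, data)
run = Runner(cb_funcs=cbfs)
```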
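The phase lookup in `combine_scheds` can also be checked without torch. The sketch below is a hypothetical torch-free variant, `combine_scheds_py`, that swaps the `nonzero().max()` search for `bisect.bisect_right` over the cumulative boundaries and applies the same clamp at `pos == 1`; `cos_sched` is an assumed stand-in for `sched_cos` without the decorator.

```python
import bisect
import math
from itertools import accumulate

def cos_sched(start, end):
    # same formula as sched_cos, without the @annealer decorator
    return lambda pos: start + (1 + math.cos(math.pi * (1 - pos))) * (end - start) / 2

def combine_scheds_py(pcts, scheds):
    """Hypothetical torch-free variant of combine_scheds."""
    assert math.isclose(sum(pcts), 1.0) and all(p >= 0 for p in pcts)
    bounds = [0.0] + list(accumulate(pcts))  # phase boundaries, e.g. [0.0, 0.3, 1.0]
    def _inner(pos):
        # last boundary <= pos, clamped so pos == 1 stays in the final phase
        idx = min(bisect.bisect_right(bounds, pos) - 1, len(scheds) - 1)
        # rescale pos to [0, 1] within the current phase
        actual_pos = (pos - bounds[idx]) / (bounds[idx + 1] - bounds[idx])
        return scheds[idx](actual_pos)
    return _inner

sched = combine_scheds_py([0.3, 0.7], [cos_sched(0.3, 0.6), cos_sched(0.6, 0.2)])
print([round(sched(p), 3) for p in (0.0, 0.15, 0.3, 0.65, 1.0)])
# → [0.3, 0.45, 0.6, 0.4, 0.2]
```

The schedule warms up from 0.3 to 0.6 in the first phase and anneals to 0.2 in the second, and the clamp is what keeps `sched(1.0)` from indexing past the last boundary.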

Questions

Why does this model have only one parameter group? (i.e. in `self.opt.param_groups[-1]`, why pick the last element?)
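A likely explanation, assuming `get_model_func` builds its optimizer from `model.parameters()`: in plain PyTorch that yields exactly one param group, so `param_groups[-1]` and `param_groups[0]` are the same dict. Groups only multiply when they are passed explicitly, for example to give some layers a different learning rate, and then `[-1]` simply records the last group's value. The model below is a hypothetical example.

```python
import torch
from torch import nn

model = nn.Sequential(nn.Linear(10, 20), nn.ReLU(), nn.Linear(20, 2))

# passing model.parameters() puts every parameter into a single group
opt = torch.optim.SGD(model.parameters(), lr=0.1)
print(len(opt.param_groups))  # 1

# explicit groups, e.g. a smaller lr for the first layer, give several entries
opt2 = torch.optim.SGD([
    {"params": model[0].parameters(), "lr": 0.01},
    {"params": model[2].parameters()},  # no "lr" key, so the default lr=0.1 applies
], lr=0.1)
print([pg["lr"] for pg in opt2.param_groups])  # [0.01, 0.1]
```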

Sylvain Gugger at a joint NYC AI & ML x NYC PyTorch on 03/20/2019
