Lecture 5: Dependency Parsing

Table of contents

Contents

“We will focus more on how human language is made and used”

1) Consistency and Dependency

1) Phrase Structure [Grammar]

organizes words into nested constitutes

- Phrase structure examples

- NP -> DET + (ADJ) + N + PP

- PP -> Prep + NP

- Different languages have different phrase structure.

2) Dependency Structure

: which words depend on(modify / arguments) which other words

Note: Having study with TWIML community, folks wondered why this is ‘Context free’ 1

Why this syntactic structure is important?

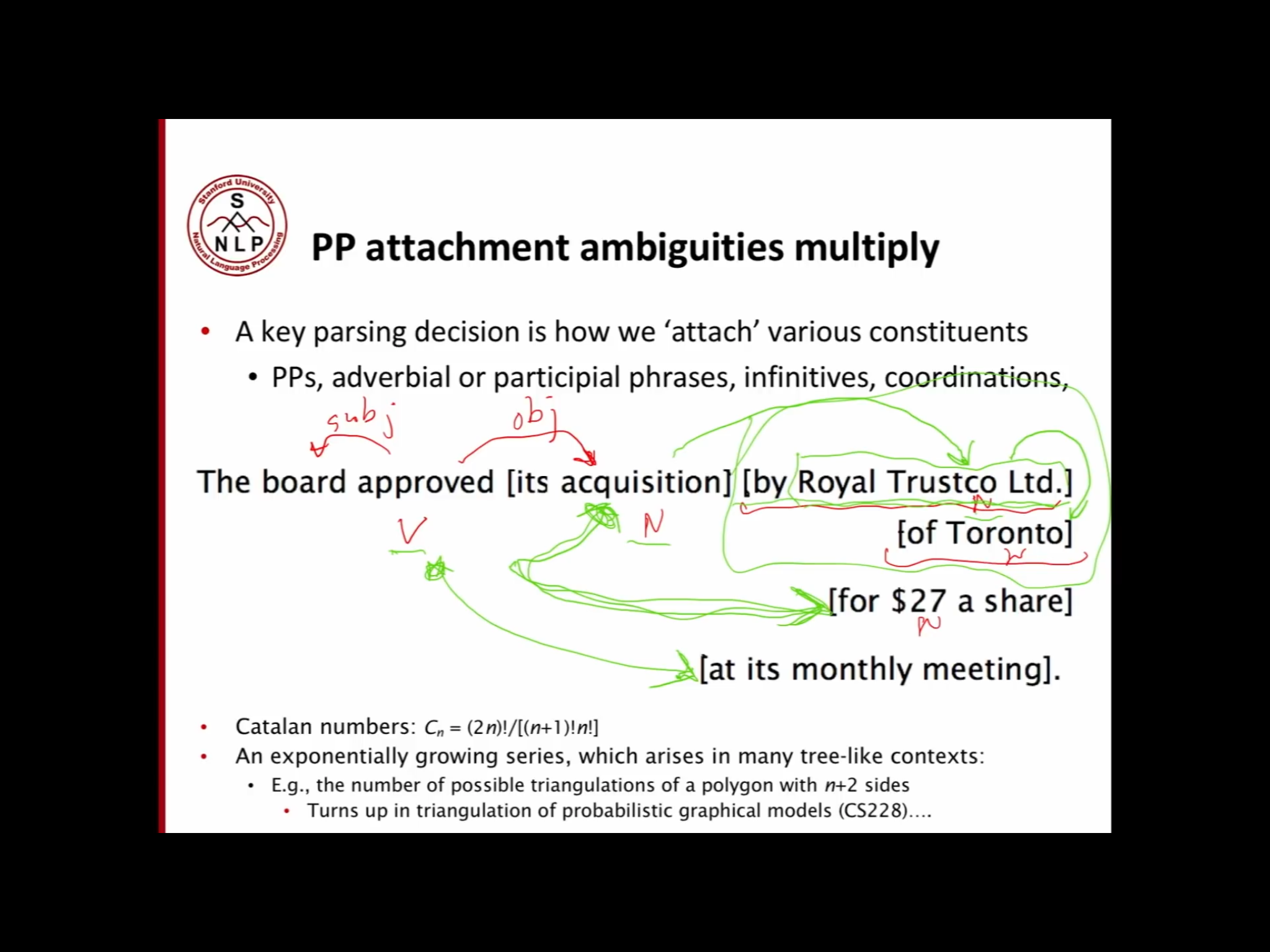

1) Prepositional phrase attachment ambiguity

San Jose cops kill man with knife

with knifemodifiesmanwith knifemodifiesSan Jose cops kill man

The board approved [its acquisition] [by Royal Trustco Ltd] [of Toronto] [for $27 a share] [at its monthly meeting].

Catalan numbers \(C_n = \frac{2n!}{(n+1)!n!}\)

- An Exponentially growing series, which arises in many tree-like contexts:

- e.g., the number of possible triangulations of a polygon with n+2 sides

- turns up in triangulation of probabilistic graphical models(cs228)…

2) Coordination scope ambiguity

Doctor: No heart, cognitive issues

- No [heart and/or cognitive] issues

- [No heart] and [cognitive issues]

3) Adjectival Modifier Ambiguity

Students get first hand job experience

- Students get [[first hand] [job] experience]

- Students get [[first [hand job] experience]

4) Verb Phrase (VP) attachment ambiguity

Mutilated body washes up on Rio beach to be used for Olympics beach volleyball

- Mutilated body washes up on [Rio [beach to be used for Olympics beach volleyball]]

- Mutilated body [washes up on Rio beach] to be used for Olympics beach volleyball

2) Dependency Grammar

Two ways of representing the dependency structure of sentence

- in a line and drawing arrows

- tree structure

Generally, people type arrows with the name of grammatical relationships, but in this case we will not go that far ahead.

Dependency tree is acyclic, single-head, connected.

- Dependency Grammar/Parsing history

2) Treebanks

Universal Dependencies, cf (#todo fill out)

People annotated structure dependencies heuristically (And this project handles with many languages other than English)

Dependency Conditioning Preferences (some dependency rules)

- Only one word is a dependent of ROOT

- Don’t make it cyclic.

- in little case, bootstrapping happens(when parse becomes non-projective)

- will comment further at next class

3) Arc-standard transition-based parser

Shift Buffer’s element to left Stack, until stack finds Head component.

-> When you find head at stack, start to reduce

-> but notice not to reduce head to root until buffer has no element.

- Left Arc means reduction of left argument, and keep head

- Right Arc means reduction of right argument, and keep head.

- Finish condition is when buffer is empty, and stack has only one element, which is

[root]

But the problem of this method is how we choose the next step is uncertain.

Usually these problem was handled using Dynamic programming to avoid too much selection cases.

4) Neural dependency parsing

1) MaltParser

[Nivre and Hall 2005]

built ML classifier,

\[Accuracy = \frac{\#\ correct\ deps}{\#\ of\ deps}\]UAS = 4 / 5 = 80% (exclude label)

LAS = 2 / 5 = 40% (include label)

2) Conventional Feature Representation was very complex.

- incomplete

- expensive computation (critical problem)

- sparse

3) Neural dependency parsers

[Chen and Manning 2014]

sent. / smeans number of sentences that algorithm can perform.- Not only fastest methods, but also accuracy gets almost highest.

- the dense representation is the key

- Used treebanks

4) Further researches

done by Google

- Using bigger, deeper networks, tuned hyperparameters

- Beam search

- Global inference, CRF over the decision sequence

-

D. Jurafsky and J. H. Martin, Speech and Language Processing, 2/e, Prentice Hall, 2008. ↩

CS224N: NLP with Deep Learning | Winter2019 | Lecture2

CS224N: NLP with Deep Learning | Winter2019 | Lecture2