Since the fastai instructors strongly emphasized rewriting the code from scratch, I’ve been using that approach.

It worked fine for Part 1, but from Part 2 onward the same strategy (trying to reproduce each notebook from a blank cell without peeking at the original) was not enough.

First, since we’ve moved into the bottom-up phase, there are lots of sub-topics worth digging into. Just replicating the code didn’t let me look deeply into each topic (sometimes I didn’t even know what I was doing). That was okay for Part 1, but it seemed insufficient for Part 2.

Second, it was literally too difficult to rely on my own memory. I ended up opening and closing Jeremy’s notebook far too many times.

So I thought it would help to draw an overall picture of each notebook, to make the replication process easier. And it did (at least with 01_matmul, 02_fully_connected, 02b_initializing and 03_minibatch_training).

So I’d like to share my own supplementary notes, hoping they help you replicate the code more easily and (hopefully) grasp what Jeremy intended to teach.

Original notebook link

My replicated Notebook Link

Initial Setup

- Fastai for Colab env
- Hyperparameters
  - Number of output units (c) = max label value + 1
  - Number of hidden units (nh) = 50
  - Number of hidden layers = 1
  - Batch size (bs) = 64

- Loss function: cross entropy [1]
- train_ds, valid_ds: instances of the `Dataset()` class
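As a rough plain-Python sketch of this setup (assuming tiny hypothetical lists in place of the MNIST tensors), `Dataset` only pairs inputs with labels, and the number of output units `c` is the largest label plus one, assuming labels run from 0 up to that maximum:

```python
class Dataset:
    """Pairs inputs with labels; indexing (or slicing) returns an (x, y) tuple."""
    def __init__(self, x, y): self.x, self.y = x, y
    def __len__(self): return len(self.x)
    def __getitem__(self, i): return self.x[i], self.y[i]

# Hypothetical toy data standing in for flattened MNIST images and digit labels.
x_train = [[0.0, 1.0], [1.0, 0.0], [0.5, 0.5], [0.2, 0.8]]
y_train = [0, 3, 1, 9]
x_valid, y_valid = [[0.9, 0.1], [0.3, 0.7]], [3, 1]

train_ds, valid_ds = Dataset(x_train, y_train), Dataset(x_valid, y_valid)
nh, bs = 50, 64          # hidden units, batch size
c = max(y_train) + 1     # output units: labels 0..max, hence max + 1 classes
xb, yb = train_ds[0:2]   # a slice returns a batch of inputs and a batch of labels
```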

DataBunch / Learner

To make our code more flexible (polymorphic), we will reduce the arguments of the `fit` function by bundling them into objects:

`fit(epochs, model, loss_func, opt, train_dl, valid_dl)` -> `fit(epochs, learn)` [2]

- Make the `DataBunch` class
  - An instance is initialized with the train/valid dataloaders and `c` (the number of classes)
  - `c` defaults to None
  - Has properties that return the underlying datasets
- Make the `data` instance
  - Initialized with the train/valid dataloaders and `c`
- Re-define the `get_model` function
  - Params: databunch, learning rate, number of hidden-layer nodes
  - Returns the model and optimizer built from those arguments
- Make the `Learner` class
  - An instance is initialized with the model, optimizer, loss function and databunch instance
- Re-define the `fit` function
  - Parameters: epochs, learner object
  - It now trains using the learner’s attributes to access the data and parameters
  - Returns the average loss and accuracy (*assumes the batch size does not vary)

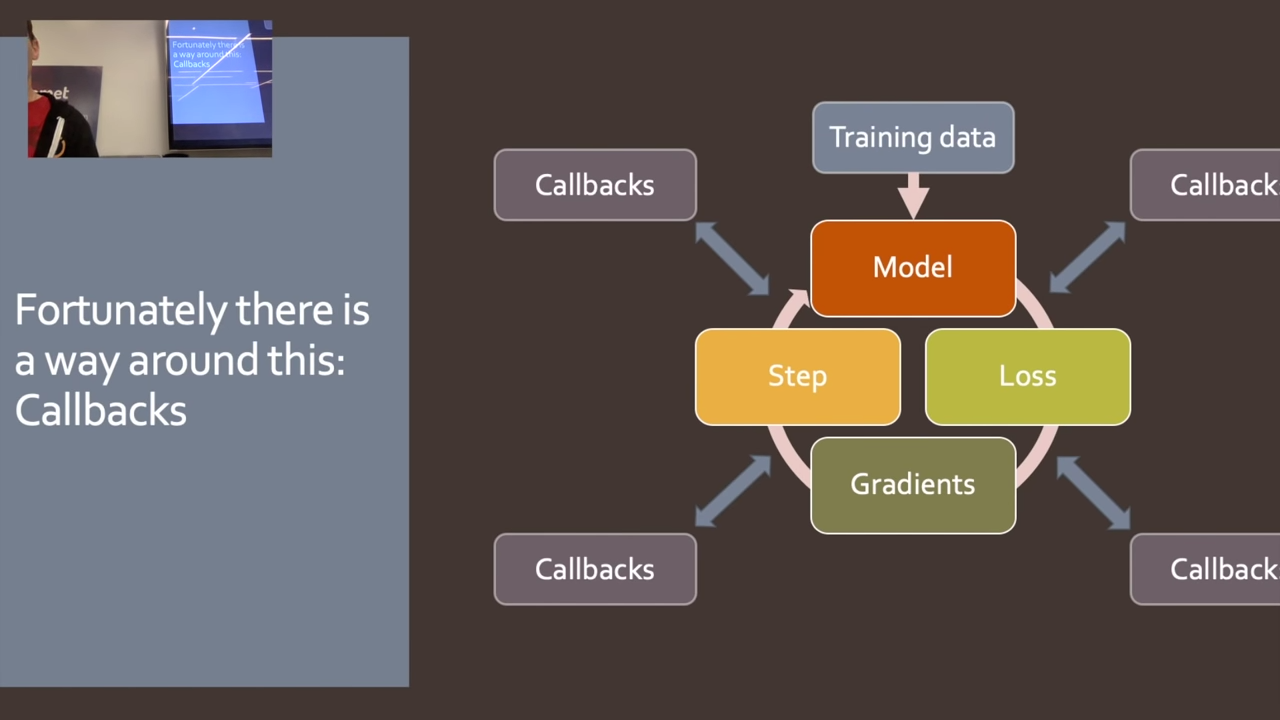
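The refactor above can be sketched in plain Python. To keep the sketch runnable without PyTorch, it assumes hypothetical stand-ins: a 1-D linear model `Linear1D` with a hand-written gradient, an `mse` loss instead of cross entropy, a tiny `SGD`, and a `DataLoader` that slices the dataset in order. Since the toy task is regression, this `fit` returns only the average validation loss, not accuracy.

```python
class Dataset:
    def __init__(self, x, y): self.x, self.y = x, y
    def __len__(self): return len(self.x)
    def __getitem__(self, i): return self.x[i], self.y[i]

class DataLoader:
    """Hypothetical minimal loader: yields (xb, yb) slices of size bs, in order."""
    def __init__(self, ds, bs): self.dataset, self.bs = ds, bs
    def __len__(self): return (len(self.dataset) + self.bs - 1) // self.bs
    def __iter__(self):
        for i in range(0, len(self.dataset), self.bs): yield self.dataset[i:i + self.bs]

class DataBunch:
    def __init__(self, train_dl, valid_dl, c=None):
        self.train_dl, self.valid_dl, self.c = train_dl, valid_dl, c
    @property
    def train_ds(self): return self.train_dl.dataset
    @property
    def valid_ds(self): return self.valid_dl.dataset

class Learner:
    """Just a container, so fit() needs only (epochs, learn)."""
    def __init__(self, model, opt, loss_func, data):
        self.model, self.opt, self.loss_func, self.data = model, opt, loss_func, data

# --- Hypothetical stand-ins for a model / loss / optimizer (not PyTorch) ---
class Preds(list):
    """Predictions that remember the model that made them (a tiny stand-in for autograd)."""
    def __init__(self, values, model):
        super().__init__(values)
        self.model = model

class Linear1D:
    """y = w * x with a hand-written gradient; no hidden layer."""
    def __init__(self): self.w, self.grad = 0.0, 0.0
    def train(self): pass  # no dropout/batchnorm, so train/eval modes are no-ops
    def eval(self): pass
    def __call__(self, xb):
        self.last_x = list(xb)
        return Preds([self.w * x for x in xb], self)
    def backward(self, dpreds):
        # dL/dw = sum_i dL/dpred_i * x_i
        self.grad += sum(d * x for d, x in zip(dpreds, self.last_x))

class Loss(float):
    """A float that can also backpropagate into the model."""
    def __new__(cls, value, backward_fn):
        obj = super().__new__(cls, value)
        obj.backward = backward_fn
        return obj

def mse(preds, yb):
    diffs = [p - y for p, y in zip(preds, yb)]
    n = len(diffs)
    # dL/dpred_i = 2 * (pred_i - y_i) / n
    return Loss(sum(d * d for d in diffs) / n,
                lambda: preds.model.backward([2 * d / n for d in diffs]))

class SGD:
    def __init__(self, model, lr): self.model, self.lr = model, lr
    def step(self): self.model.w -= self.lr * self.model.grad
    def zero_grad(self): self.model.grad = 0.0

def get_model(data, lr=0.1):
    # `data` is unused by this 1-D toy; an MLP version would read its sizes from it.
    model = Linear1D()
    return model, SGD(model, lr)

def fit(epochs, learn):
    for epoch in range(epochs):
        learn.model.train()
        for xb, yb in learn.data.train_dl:
            loss = learn.loss_func(learn.model(xb), yb)
            loss.backward()
            learn.opt.step()
            learn.opt.zero_grad()
        learn.model.eval()
        tot_loss = 0.0
        for xb, yb in learn.data.valid_dl:
            tot_loss += learn.loss_func(learn.model(xb), yb)
        nv = len(learn.data.valid_dl)
    # Averaging per batch is exact only when every batch has the same size.
    return tot_loss / nv

xs = [i / 10 for i in range(40)]
train_ds = Dataset(xs[:32], [3 * x for x in xs[:32]])
valid_ds = Dataset(xs[32:], [3 * x for x in xs[32:]])
data = DataBunch(DataLoader(train_ds, 8), DataLoader(valid_ds, 8))
learn = Learner(*get_model(data), mse, data)
valid_loss = fit(20, learn)  # learn.model.w should end up close to 3.0
```

The point of the design is that `fit` never receives the model, optimizer or loaders directly; it reaches everything through `learn`, so swapping one piece only changes what goes into `Learner`.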

CallbackHandler

(Note: I’m still trying to grasp the overall content, so this section may be a bit messy.)

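* Normally an `if not` check is *not* satisfied, because each hook returns True. When the `if not` condition is satisfied, some callback returned False, i.e. something went wrong or the loop was asked to skip/stop. [3]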

Basic

`fit(1, learn, cb=CallbackHandler([TestCallback()]))`

- fn `one_batch`: `begin_batch`, `after_loss`, `after_backward`, `after_step`
- fn `all_batches`: `do_stop`
- fn `fit`: `begin_fit`, `begin_epoch`, `begin_validate`, then `do_stop` & `after_epoch`, and finally `after_fit`
- class `Callback()`: has the same hooks as mentioned above, except `do_stop`
  - each hook saves its inputs (if any) and then returns a boolean (I think the specifics are not implemented yet)
- class `CallbackHandler()`: init takes `cbs`, a list of callbacks
  - called directly during the training process
  - does not save inputs [4]
- class `TestCallback(Callback)`: inherits `Callback`, but interrupts the target, i.e. the iteration
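The callback flow above can be sketched in plain Python, assuming stub objects (an identity "model", a no-op loss and an optimizer that only counts steps) rather than PyTorch. Every hook returns True for "carry on", so an `if not` check only fires when some callback returns False. `TestCallback` interrupts training by setting a `stop` flag on the learner, which `do_stop` reads once and then clears; the `_run` helper is a shortcut of this sketch.

```python
from types import SimpleNamespace

class Callback:
    """Base callback: every hook returns True, meaning "carry on"."""
    def begin_fit(self, learn):
        self.learn = learn
        return True
    def after_fit(self): return True
    def begin_epoch(self, epoch):
        self.epoch = epoch
        return True
    def begin_validate(self): return True
    def after_epoch(self): return True
    def begin_batch(self, xb, yb):
        self.xb, self.yb = xb, yb  # the callback keeps the inputs
        return True
    def after_loss(self, loss):
        self.loss = loss
        return True
    def after_backward(self): return True
    def after_step(self): return True

class CallbackHandler:
    """Forwards every stage to all callbacks; the handler itself stores no xb/yb."""
    def __init__(self, cbs=None): self.cbs = cbs if cbs else []
    def _run(self, name, *args, res=True):
        # Chain the results with `and`: a single False means "skip/stop".
        for cb in self.cbs: res = res and getattr(cb, name)(*args)
        return res
    def begin_fit(self, learn):
        self.learn, self.in_train = learn, True
        learn.stop = False
        return self._run('begin_fit', learn)
    def after_fit(self): return self._run('after_fit')
    def begin_epoch(self, epoch):
        self.learn.model.train()
        self.in_train = True
        return self._run('begin_epoch', epoch)
    def begin_validate(self):
        self.learn.model.eval()
        self.in_train = False
        return self._run('begin_validate')
    def after_epoch(self): return self._run('after_epoch')
    def begin_batch(self, xb, yb): return self._run('begin_batch', xb, yb)
    # Starting from in_train means validation batches never reach backward/step.
    def after_loss(self, loss): return self._run('after_loss', loss, res=self.in_train)
    def after_backward(self): return self._run('after_backward')
    def after_step(self): return self._run('after_step')
    def do_stop(self):
        try: return self.learn.stop
        finally: self.learn.stop = False  # report the flag once, then clear it

class TestCallback(Callback):
    """Asks the loop to stop after 10 optimizer steps."""
    def begin_fit(self, learn):
        super().begin_fit(learn)
        self.n_iters = 0
        return True
    def after_step(self):
        self.n_iters += 1
        if self.n_iters >= 10: self.learn.stop = True
        return True

def one_batch(xb, yb, cb):
    if not cb.begin_batch(xb, yb): return
    loss = cb.learn.loss_func(cb.learn.model(xb), yb)
    if not cb.after_loss(loss): return
    loss.backward()
    if cb.after_backward(): cb.learn.opt.step()
    if cb.after_step(): cb.learn.opt.zero_grad()

def all_batches(dl, cb):
    for xb, yb in dl:
        one_batch(xb, yb, cb)
        if cb.do_stop(): return

def fit(epochs, learn, cb):
    if not cb.begin_fit(learn): return
    for epoch in range(epochs):
        if not cb.begin_epoch(epoch): continue
        all_batches(learn.data.train_dl, cb)
        # With PyTorch, this validation pass would run inside torch.no_grad().
        if cb.begin_validate(): all_batches(learn.data.valid_dl, cb)
        if cb.do_stop() or not cb.after_epoch(): break
    cb.after_fit()

# Hypothetical stand-ins: identity "model", no-op loss, step-counting optimizer.
def stub_model(xb): return xb
stub_model.train = stub_model.eval = lambda: None
opt = SimpleNamespace(steps=0, zero_grad=lambda: None)
opt.step = lambda: setattr(opt, 'steps', opt.steps + 1)
batches = [([i], [i]) for i in range(100)]
learn = SimpleNamespace(model=stub_model, opt=opt, loss_func=lambda pred, yb: SimpleNamespace(backward=lambda: None),
                        data=SimpleNamespace(train_dl=batches, valid_dl=batches[:5]))
fit(1, learn, cb=CallbackHandler([TestCallback()]))
```

With 100 training batches, the optimizer ends up stepping only 10 times, and the validation batches never reach `backward` or `step` because `after_loss` starts from `in_train`.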

Runner

We will split the callback class into several classes, each made up of some of the functions it had.

1. Make a function that converts camel case to snake case (`camel2snake`)
2. `Callback`
   - `set_runner`, `__getattr__`, and a `name` property that converts the class name to snake case using 1
3. `TrainEvalCallback`: switches the model between train/valid mode, counts iterations, and tracks the iteration status
4. `TestCallback`: runs for just 10 iterations
   - Classes 3 and 4 inherit from `Callback`; check their converted names
5. `listify` function, which turns its input into a list
6. `Runner` class
   - takes additional callbacks and sets them as attributes, merging them with `TrainEvalCallback`
   - sets up `opt`, `model`, `loss_func` and `data` and makes them available as properties
   - `one_batch`, `all_batches` and `fit` methods, plus a dunder `__call__` that checks whether each callback has the requested hook

AvgStatsCallback

- Make the `AvgStats` class, which saves, tracks and calculates the loss and metrics
- Make `AvgStatsCallback`, whose `begin_epoch`, `after_loss` and `after_epoch` hooks reset the stats, accumulate the values, and print out the resulting stats
- See the results by running with the stats callback
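A plain-Python sketch of `AvgStats` and `AvgStatsCallback`, driving the three hooks by hand with a hypothetical runner state (a `SimpleNamespace` holding `in_train`, `xb`, `yb`, `pred` and `loss`) instead of a full Runner. The `accuracy` metric here is an assumption that takes class labels rather than logits. Each batch is weighted by its size, so the averages stay exact even when the last batch is smaller.

```python
from types import SimpleNamespace

class Callback:
    # Minimal base: attributes missing on the callback are looked up on the runner.
    def set_runner(self, run): self.run = run
    def __getattr__(self, k): return getattr(self.run, k)

class AvgStats:
    """Accumulates loss and metrics over an epoch, weighted by batch size."""
    def __init__(self, metrics, in_train): self.metrics, self.in_train = metrics, in_train
    def reset(self):
        self.tot_loss, self.count = 0., 0
        self.tot_mets = [0.] * len(self.metrics)
    @property
    def all_stats(self): return [self.tot_loss] + self.tot_mets
    @property
    def avg_stats(self): return [o / self.count for o in self.all_stats]
    def __repr__(self):
        if not self.count: return ""
        return f"{'train' if self.in_train else 'valid'}: {self.avg_stats}"
    def accumulate(self, run):
        bn = len(run.xb)  # size of the current batch
        self.tot_loss += run.loss * bn
        self.count += bn
        for i, m in enumerate(self.metrics):
            self.tot_mets[i] += m(run.pred, run.yb) * bn

class AvgStatsCallback(Callback):
    def __init__(self, metrics):
        self.train_stats, self.valid_stats = AvgStats(metrics, True), AvgStats(metrics, False)
    def begin_epoch(self):  # reset
        self.train_stats.reset()
        self.valid_stats.reset()
    def after_loss(self):  # accumulate into the train or valid bucket
        stats = self.train_stats if self.in_train else self.valid_stats
        stats.accumulate(self.run)
    def after_epoch(self):  # print out the averaged stats
        print(self.train_stats)
        print(self.valid_stats)

def accuracy(preds, yb):
    # Hypothetical metric: preds are already class labels here, not logits.
    return sum(p == y for p, y in zip(preds, yb)) / len(yb)

# Hypothetical runner state for three batches: (in_train, xb, yb, pred, loss).
batches = [
    (True,  [0.1, 0.2, 0.3, 0.4], [1, 2, 3, 4], [1, 2, 0, 4], 0.5),
    (True,  [0.5, 0.6],           [5, 6],       [5, 6],       0.2),
    (False, [0.7, 0.8],           [7, 8],       [0, 8],       1.0),
]
cb = AvgStatsCallback([accuracy])
cb.set_runner(SimpleNamespace())
cb.begin_epoch()
for in_train, xb, yb, pred, loss in batches:
    cb.run.in_train, cb.run.xb, cb.run.yb, cb.run.pred, cb.run.loss = in_train, xb, yb, pred, loss
    cb.after_loss()
cb.after_epoch()
```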

Part2 lesson 9, 03_minibatch_training | fastai 2019 course -v3
