This session closely follows "What is torch.nn really?" by Jeremy Howard.

Q1. Implement a basic neural network model that applies its layers one after another, feeding each layer's output into the next, and returns the prediction.

Q2. Implement the log_softmax and cross_entropy functions, and calculate the loss. How are the two functions related?

Q3. Refactor log_softmax using the LogSumExp trick. Show that the customized version matches PyTorch's.

Q4. Implement an accuracy function and check the result on one small batch of training data with a freshly initialized model. After that, run mini-batch training with the minimal ingredients of a deep learning loop, i.e., forward / loss / backward / optimize. You don't have to validate the model here.

Q5. This time, rely on `nn.Module.__setattr__` in your customized Model so that you can access the model parameters during training. Compare this fit (training process) with the previous one. How has it been improved?

Q6. Let's crack how PyTorch overrides the `__setattr__` method. This time, implement a customized module, DummyModule, without inheriting from nn.Module, and test it as the model.

Q7. Now suppose we don't know how many layers we will get, which means the layers have to be passed in as a parameter (rather than hard-coded as attributes inside the model, like l1, l2, etc.). Implement your model in three different ways.

- Register the modules one by one,

- or pass a list of layers to nn.ModuleList,

- or, finally, use nn.Sequential, which 1) takes the list of modules and registers them (so their parameters are tracked) and 2) composes the layers.

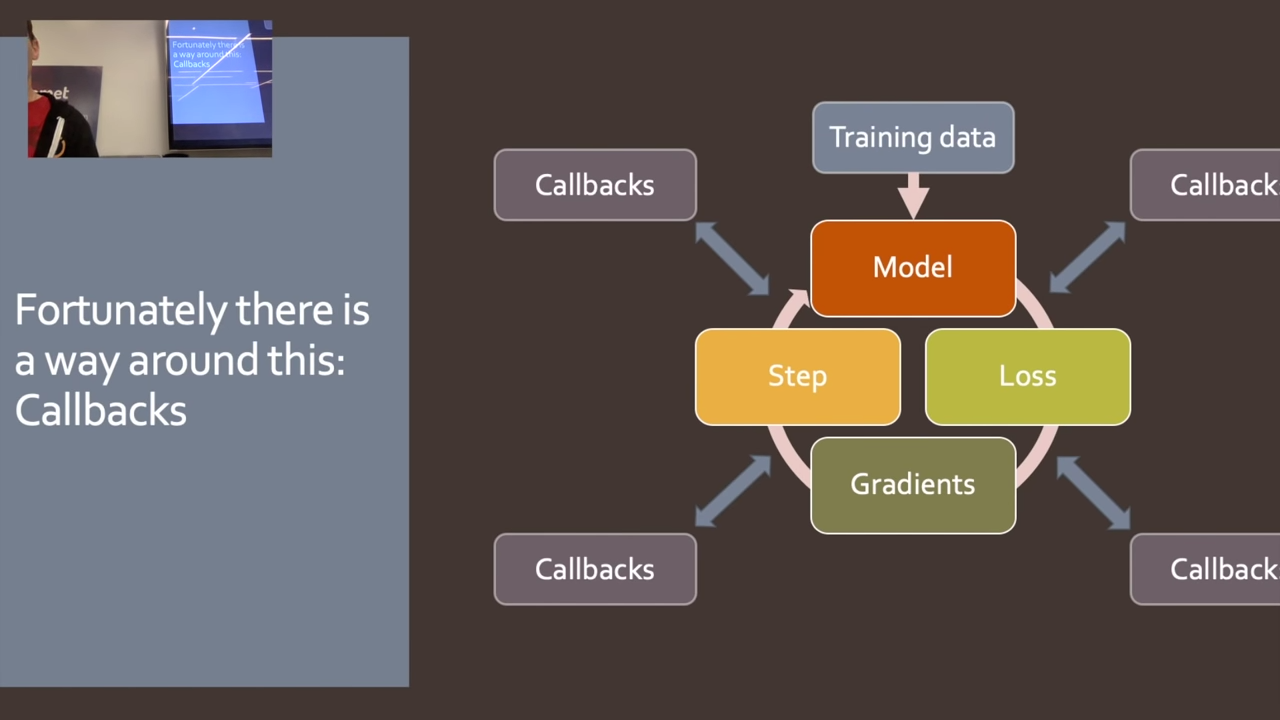

Summary: So far, starting from the basic training loop, we have handed two jobs over to torch.nn: 1) checking whether a layer has parameters and 2) composing an arbitrary number of layers.

- Next to hand over: updating the parameters and zeroing out the leftover gradients.

Q8. Make an (SGD) Optimizer class with methods for updating the parameters and zeroing the gradients. Test the optimizer by training the model.

- Hint: nn.Sequential.parameters() returns a generator, which is exhausted after a single pass.

Q9. This time, use PyTorch's optimizer module, torch.optim.

Q10. Implement a Dataset which combines the x and y data. Train using that dataset.

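Q11. Implement a DataLoader so that your training loop looks much cleaner. Train using that dataloader.

Q12. Now implement a Sampler which puts the training set in a random order, and that order should differ on each iteration. Combine it with a collate function so that the sampled items are stacked into separate xb and yb batches.

Q13. Use PyTorch's DataLoader.

A1.

```python3
import torch
from torch import nn
import torch.nn.functional as F

# x_train, y_train are assumed to be loaded earlier in the notebook (MNIST in the lesson)
n, m = x_train.shape          # number of samples, number of input features
c = y_train.max().item() + 1  # number of classes
nh = 50                       # number of hidden units

class Model(nn.Module):
    def __init__(self, n_in, nh, n_out):
        super().__init__()
        # a plain Python list: nn.Module does not register these layers
        self.layers = [nn.Linear(n_in, nh), nn.ReLU(), nn.Linear(nh, n_out)]

    # the lesson overrides __call__ directly; idiomatic PyTorch would define forward()
    def __call__(self, x):
        for l in self.layers: x = l(x)  # feed each layer's output into the next
        return x

pred = Model(m, nh, 10)(x_train)
```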
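Seen from outside PyTorch, the model in A1 is just function composition. A framework-free sketch in plain Python, where the toy layers `double`, `relu`, `add_one` and the `compose` helper are hypothetical stand-ins for nn.Linear / nn.ReLU rather than PyTorch APIs:

```python
from functools import reduce

def relu(x):
    # element-wise max(0, v) on a list of floats
    return [max(0.0, v) for v in x]

def double(x):
    # stand-in for a linear layer: scales every element by 2
    return [2.0 * v for v in x]

def add_one(x):
    # stand-in for a bias term
    return [v + 1.0 for v in x]

def compose(layers):
    # returns f(x) = layers[-1](...layers[1](layers[0](x)))
    return lambda x: reduce(lambda acc, layer: layer(acc), layers, x)

model = compose([double, relu, add_one])
print(model([-1.5, 0.0, 2.0]))  # [1.0, 1.0, 5.0]
```

With an empty list, `compose([])` is the identity, which matches a loop over zero layers.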

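A2. Cross-entropy reduces to the negative log likelihood of the log-softmax output because the target is a one-hot encoded vector: in -Σ y_i log p_i every term except the correct class is multiplied by 0, so cross_entropy(x, y) = nll(log_softmax(x), y).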

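```python3
# naive version: take the log of the softmax
def log_softmax(x): return (x.exp()/(x.exp().sum(-1,keepdim=True))).log()

# same result, simplified with log(a/b) = log(a) - log(b)
def log_softmax(x): return x - x.exp().sum(-1,keepdim=True).log()

# negative log likelihood: pick each row's log-probability at its target index, average, negate
def nll(input, target): return -input[range(target.shape[0]), target].mean()

loss = nll(log_softmax(pred), y_train)
loss
```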
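The relationship in A2 can be checked numerically without PyTorch. This plain-Python sketch (the function names are hypothetical, chosen to mirror the ones above) computes cross-entropy against a one-hot target from its full definition and compares it with nll of log_softmax; the one-hot vector zeroes out every term except the target class.

```python
import math

def log_softmax(row):
    # log(exp(x_i) / sum_j exp(x_j)) = x_i - log(sum_j exp(x_j))
    lse = math.log(sum(math.exp(v) for v in row))
    return [v - lse for v in row]

def nll(log_probs, targets):
    # mean of -log p[target] over the batch
    return -sum(lp[t] for lp, t in zip(log_probs, targets)) / len(targets)

def cross_entropy_one_hot(logits, targets):
    # full definition: -sum_i y_i * log(p_i), with y a one-hot vector
    total = 0.0
    for row, t in zip(logits, targets):
        denom = sum(math.exp(v) for v in row)
        p = [math.exp(v) / denom for v in row]
        y = [1.0 if i == t else 0.0 for i in range(len(row))]
        total += -sum(yi * math.log(pi) for yi, pi in zip(y, p))
    return total / len(targets)

logits = [[2.0, 1.0, 0.1], [0.5, 2.5, -1.0]]
targets = [0, 1]
a = cross_entropy_one_hot(logits, targets)
b = nll([log_softmax(r) for r in logits], targets)
print(abs(a - b) < 1e-12)  # True
```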

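A3.

```python3
def logsumexp(x):
    # subtract the row max before exp() so large logits cannot overflow,
    # then add it back: log(sum(exp(x))) = m + log(sum(exp(x - m)))
    m = x.max(-1)[0]
    return m + (x-m[:,None]).exp().sum(-1).log()

# test_near comes from the lesson's earlier notebooks; a stand-in with assumed tolerances:
def test_near(a, b): assert torch.allclose(a, b, rtol=1e-3, atol=1e-5)

test_near(logsumexp(pred), pred.logsumexp(-1))

# log_softmax rewritten with PyTorch's built-in logsumexp
def log_softmax(x): return x - x.logsumexp(-1,keepdim=True)

# each of these should match the loss computed in A2
test_near(nll(log_softmax(pred), y_train), loss)
test_near(F.nll_loss(F.log_softmax(pred, -1), y_train), loss)
test_near(F.cross_entropy(pred, y_train), loss)
```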
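Why the max is subtracted in A3: `exp` overflows for large logits. A plain-Python sketch (the two function names are hypothetical) comparing a naive log-sum-exp with the shifted one, using log Σ exp(x_j) = a + log Σ exp(x_j - a) with a = max(x):

```python
import math

def logsumexp_naive(xs):
    return math.log(sum(math.exp(v) for v in xs))

def logsumexp_stable(xs):
    # shift by the max so the largest exponent is exp(0) = 1: no overflow
    a = max(xs)
    return a + math.log(sum(math.exp(v - a) for v in xs))

small = [1.0, 2.0, 3.0]
big = [1000.0, 1000.0]

print(math.isclose(logsumexp_naive(small), logsumexp_stable(small)))  # True
print(logsumexp_stable(big))  # 1000 + log(2), about 1000.6931
try:
    logsumexp_naive(big)
except OverflowError:
    print("naive version overflows")
```

Both versions agree whenever the naive one can be computed; only the shifted one survives inputs like 1000.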

A4.

```python3
# see the initialized model's performance
def accuracy(out, yb): return (torch.argmax(out, dim=1)==yb).float().mean()

model = Model(m, nh, 10)
loss_func = F.cross_entropy

bs = 64              # batch size
xb = x_train[0:bs]   # a mini-batch from x
yb = y_train[0:bs]   # the matching targets
preds = model(xb)    # predictions
accuracy(preds, yb)

# naive mini-batch training
lr, epochs = 0.5, 1
for epoch in range(epochs):
    for i in range((n-1)//bs + 1):
        start_i = i*bs
        end_i = start_i+bs
        xb = x_train[start_i:end_i]
        yb = y_train[start_i:end_i]
        loss = loss_func(model(xb), yb)

        loss.backward()
        with torch.no_grad():
            # only layers with a weight have parameters to update (ReLU has none)
            for l in model.layers:
                if hasattr(l, 'weight'):
                    l.weight -= l.weight.grad * lr
                    l.bias   -= l.bias.grad * lr
                    l.weight.grad.zero_()
                    l.bias.grad.zero_()

accuracy(model(xb), yb)
```
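The loop bound `(n-1)//bs + 1` in A4 is integer ceiling division: it counts the batches needed to cover all n samples, and the last batch may be shorter. A quick plain-Python check (the helper `batch_bounds` is hypothetical):

```python
import math

def batch_bounds(n, bs):
    # (start, end) slice bounds computed exactly as in the training loop
    return [(i * bs, i * bs + bs) for i in range((n - 1) // bs + 1)]

# (n-1)//bs + 1 equals ceil(n / bs) for every positive n
for n in range(1, 200):
    for bs in (1, 7, 64):
        assert (n - 1) // bs + 1 == math.ceil(n / bs)

print(batch_bounds(10, 4))  # [(0, 4), (4, 8), (8, 12)]
```

The last slice, `x[8:12]` on 10 items, simply returns the remaining 2 items, because slicing clamps to the sequence length; that is why the loop never needs a special case for the final batch.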

A5.

```python3
class Model(nn.Module):
    def __init__(self, n_in, nh, n_out):
        super().__init__()
        # assigning a Module as an attribute registers it (via nn.Module.__setattr__)
        self.l1 = nn.Linear(n_in,nh)
        self.l2 = nn.Linear(nh,n_out)

    def __call__(self, x): return self.l2(F.relu(self.l1(x)))

model = Model(m, nh, 10)

def fit():
    for epoch in range(epochs):
        for i in range((n-1)//bs + 1):
            start_i = i*bs
            end_i = start_i+bs
            xb = x_train[start_i:end_i]
            yb = y_train[start_i:end_i]
            loss = loss_func(model(xb), yb)

            loss.backward()
            with torch.no_grad():
                # model.parameters() yields every registered weight and bias
                for p in model.parameters(): p -= p.grad * lr
                model.zero_grad()

fit()
```

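- There is no need to check whether a layer has a 'weight' (parameter) attribute: nn.Module overrides `__setattr__`, so every submodule assigned as an attribute (self.l1, self.l2) is registered automatically. model.parameters() then yields all their weights and biases, and model.zero_grad() clears all their gradients.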

A6.

```python3
class DummyModule():
    def __init__(self, n_in, nh, n_out):
        self._modules = {}  # name starts with "_", so __setattr__ stores it without registering it
        self.l1 = nn.Linear(n_in,nh)
        self.l2 = nn.Linear(nh,n_out)

    def __setattr__(self,k,v):
        # register every public attribute as a submodule, then store it as usual
        if not k.startswith("_"): self._modules[k] = v
        super().__setattr__(k,v)

    def __repr__(self): return f'{self._modules}'

    def parameters(self):
        # yield the parameters of every registered submodule
        for l in self._modules.values():
            for p in l.parameters(): yield p

    def __call__(self, x): return self.l2(F.relu(self.l1(x)))

    def zero_grad(self):
        for p in self.parameters():
            p.grad.data.zero_()

model = DummyModule(m, nh, 10)

def fit():
    for epoch in range(epochs):
        for i in range((n-1)//bs + 1):
            start_i = i*bs
            end_i = start_i+bs
            xb = x_train[start_i:end_i]
            yb = y_train[start_i:end_i]
            loss = loss_func(model(xb), yb)

            loss.backward()
            with torch.no_grad():
                for p in model.parameters(): p -= p.grad * lr
                model.zero_grad()

fit()
```
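The trick in A6 does not depend on PyTorch at all. Below is a plain-Python sketch, assuming toy classes `Param`, `TinyModule`, `TinyLinear` and `TinyNet` (all hypothetical), that registers parameters and submodules in `__setattr__` and collects them recursively, so nested modules work too. Instead of A6's name-prefix test it dispatches on the value's type, which is closer in spirit to nn.Module, whose `__setattr__` likewise checks whether the value is a Parameter or a Module.

```python
class Param:
    # hypothetical stand-in for a tensor with a gradient
    def __init__(self, value):
        self.value, self.grad = value, 0.0

class TinyModule:
    def __init__(self):
        # object.__setattr__ bypasses our override, so the registries are not registered
        object.__setattr__(self, "_params", {})
        object.__setattr__(self, "_modules", {})

    def __setattr__(self, name, value):
        # route each attribute into the matching registry, then store it normally
        if isinstance(value, Param):
            self._params[name] = value
        elif isinstance(value, TinyModule):
            self._modules[name] = value
        object.__setattr__(self, name, value)

    def parameters(self):
        # own parameters first, then those of every registered submodule
        yield from self._params.values()
        for m in self._modules.values():
            yield from m.parameters()

class TinyLinear(TinyModule):
    def __init__(self):
        super().__init__()
        self.weight, self.bias = Param(1.0), Param(0.0)

class TinyNet(TinyModule):
    def __init__(self):
        super().__init__()
        self.l1, self.l2 = TinyLinear(), TinyLinear()
        self.note = "plain attributes are stored but not registered"

net = TinyNet()
print(len(list(net.parameters())))  # 4
```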

A7.

```python3
layers = [nn.Linear(m,nh), nn.ReLU(), nn.Linear(nh,10)]

# 1) register the modules one by one
class Model(nn.Module):
    def __init__(self, layers):
        super().__init__()
        self.layers = layers  # a plain list is not registered by itself...
        for i,l in enumerate(self.layers): self.add_module(f'layer_{i}', l)  # ...so register each layer

    def __call__(self, x):
        for l in self.layers: x = l(x)
        return x

model = Model(layers)

# 2) let nn.ModuleList register the list of layers
class SequentialModel(nn.Module):
    def __init__(self, layers):
        super().__init__()
        self.layers = nn.ModuleList(layers)

    def __call__(self, x):
        for l in self.layers: x = l(x)
        return x

model = SequentialModel(layers)

# 3) nn.Sequential both registers and composes the layers
model = nn.Sequential(nn.Linear(m,nh), nn.ReLU(), nn.Linear(nh,10))
```
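What nn.Sequential adds in A7 (register the layers, then compose them) fits in a few lines of plain Python. `TinySequential` is a hypothetical illustration, not PyTorch's implementation; like nn.Sequential, it names its children by their index as strings ('0', '1', ...).

```python
class TinySequential:
    def __init__(self, *layers):
        # 1) register: keep every layer under its index, as add_module(str(i), layer) would
        self._modules = {str(i): layer for i, layer in enumerate(layers)}

    def __call__(self, x):
        # 2) compose: feed each layer's output into the next
        for layer in self._modules.values():
            x = layer(x)
        return x

seq = TinySequential(lambda x: 3 * x, lambda x: max(0, x), lambda x: x - 1)
print(list(seq._modules))  # ['0', '1', '2']
print(seq(2), seq(-2))     # 5 -1
```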

A8.

```python3
class Optimizer():
    def __init__(self, params, lr):
        # params is a generator (see the hint); store it as a list so that
        # step() and zero_grad() can iterate over it on every call
        self.params, self.lr = list(params), lr

    def zero_grad(self):
        for p in self.params:
            p.grad.data.zero_()

    def step(self):
        with torch.no_grad():
            for p in self.params:
                p -= p.grad * self.lr

def fit():
    for epoch in range(epochs):
        for i in range((n-1)//bs + 1):
            start_i = i*bs
            end_i = start_i+bs
            xb = x_train[start_i:end_i]
            yb = y_train[start_i:end_i]
            loss = loss_func(model(xb), yb)

            loss.backward()
            opt.step()
            opt.zero_grad()

model = nn.Sequential(nn.Linear(x_train.shape[1], nh), nn.ReLU(), nn.Linear(nh, c))

# accuracy before training
(model(x_train[:bs]).max(-1).indices == y_train[:bs]).sum() / bs

opt = Optimizer(model.parameters(), 0.9)
fit()

# accuracy after training
(model(x_train[:bs]).max(-1).indices == y_train[:bs]).sum() / bs
```
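Why the hint matters: a generator can be iterated only once. If an optimizer stores `model.parameters()` as-is, whichever of `step()` or `zero_grad()` runs first consumes it, and every later call silently loops over nothing. This plain-Python sketch uses a hypothetical `Param` class (a value plus a gradient), a hypothetical `SGD` class with a `materialize` switch, and a hypothetical `train_two_steps` helper to show the failure and the `list(...)` fix.

```python
class Param:
    # hypothetical stand-in for a tensor: a value plus its gradient
    def __init__(self, value):
        self.value, self.grad = value, 0.0

class SGD:
    def __init__(self, params, lr, materialize=True):
        # materialize=True copies the parameters into a list so they can be
        # iterated again on every call; False keeps whatever iterable was passed
        self.params = list(params) if materialize else params
        self.lr = lr

    def step(self):
        for p in self.params:
            p.value -= self.lr * p.grad

    def zero_grad(self):
        for p in self.params:
            p.grad = 0.0

def train_two_steps(materialize):
    ps = [Param(1.0), Param(-2.0)]
    opt = SGD((p for p in ps), lr=0.1, materialize=materialize)  # pass a generator
    for _ in range(2):
        for p in ps:
            p.grad = 1.0  # pretend backward() filled in the gradients
        opt.step()
        opt.zero_grad()
    return [round(p.value, 2) for p in ps]

print(train_two_steps(False))  # [0.9, -2.1]: only the first step() did anything
print(train_two_steps(True))   # [0.8, -2.2]: both steps were applied
```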

A9.

```python3
from torch import optim

def get_model(model_func, lr=0.9):
    # build a Sequential model from the layers and pair it with PyTorch's SGD
    model = nn.Sequential(*model_func())
    return model, optim.SGD(model.parameters(), lr=lr)

def get_layers():
    return nn.Linear(x_train.shape[1], nh), nn.ReLU(), nn.Linear(nh, c)

model, opt = get_model(get_layers, 0.5)
print((model(x_train[:bs]).max(-1).indices == y_train[:bs]).sum() / bs)  # before training
fit()
print((model(x_train[:bs]).max(-1).indices == y_train[:bs]).sum() / bs)  # after training
```

A10.

```python3
class Dataset():
    def __init__(self, x, y): self.x, self.y = x, y
    def __len__(self): return len(self.x)
    # i can be an index or a slice, so one call can return a whole mini-batch
    def __getitem__(self, i): return self.x[i], self.y[i]

train_ds = Dataset(x_train, y_train)

# start from a fresh model and optimizer
model, opt = get_model(get_layers, 0.5)

for epoch in range(epochs):
    for i in range((n-1)//bs + 1):
        xb, yb = train_ds[i*bs:(i+1)*bs]
        pred = model(xb)
        loss = loss_func(pred, yb)

        loss.backward()
        opt.step()
        opt.zero_grad()

loss, acc = loss_func(model(xb), yb), accuracy(model(xb), yb)
```
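A10's Dataset can serve whole mini-batches because indexing with a slice returns a slice of each tensor. The same container in plain Python, assuming lists instead of tensors (`ListDataset` is a hypothetical name):

```python
class ListDataset:
    def __init__(self, x, y):
        assert len(x) == len(y), "x and y must be aligned"
        self.x, self.y = x, y

    def __len__(self):
        return len(self.x)

    def __getitem__(self, i):
        # i may be an int (one sample) or a slice (a batch); lists support both
        return self.x[i], self.y[i]

ds = ListDataset([[0.1, 0.2], [0.3, 0.4], [0.5, 0.6]], [0, 1, 1])
print(len(ds))  # 3
print(ds[1])    # ([0.3, 0.4], 1)
print(ds[0:2])  # ([[0.1, 0.2], [0.3, 0.4]], [0, 1])
```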

A11.

```python3
class DataLoader():
    def __init__(self, ds, bs): self.ds, self.bs = ds, bs
    def __iter__(self):
        # yield consecutive slices of the dataset, bs items at a time
        for i in range(0, len(self.ds), self.bs): yield self.ds[i:i+self.bs]

loss_func = F.cross_entropy
model, opt = get_model(get_layers, lr = 0.001)
train_dl = DataLoader(train_ds, bs)

for epoch in range(epochs):
    for xb, yb in train_dl:
        pred = model(xb)
        loss = loss_func(pred, yb)
        loss.backward()  # compute gradients before stepping
        opt.step()
        opt.zero_grad()
```
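Because `__iter__` in A11 is a generator function, every `for` loop over the loader starts a fresh pass, so the same loader can be reused epoch after epoch. A plain-Python sketch of the same idea; `PairDataset` and `SimpleLoader` are hypothetical names, and lists stand in for tensors:

```python
class PairDataset:
    # minimal dataset: slicing returns (x-batch, y-batch), like A10's Dataset
    def __init__(self, x, y): self.x, self.y = x, y
    def __len__(self): return len(self.x)
    def __getitem__(self, i): return self.x[i], self.y[i]

class SimpleLoader:
    def __init__(self, ds, bs): self.ds, self.bs = ds, bs
    def __iter__(self):
        # a generator: each `for` loop gets a fresh pass over the data
        for i in range(0, len(self.ds), self.bs):
            yield self.ds[i:i + self.bs]

dl = SimpleLoader(PairDataset(list(range(10)), list("abcdefghij")), bs=4)
print([len(xb) for xb, yb in dl])  # [4, 4, 2]
print([len(xb) for xb, yb in dl])  # [4, 4, 2] again: the loader is reusable
```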

A12.

```python3
class Sampler():
    def __init__(self, ds, bs, shuffle=False):
        self.n, self.bs, self.shuffle = len(ds), bs, shuffle

    def __iter__(self):
        # a fresh permutation on every pass, so each epoch sees a new order
        self.idxs = torch.randperm(self.n) if self.shuffle else torch.arange(self.n)
        for i in range(0, self.n, self.bs): yield self.idxs[i:i+self.bs]

small_ds = Dataset(*train_ds[:50])

samp = Sampler(small_ds, 10, True)
[o for o in samp]
[o for o in samp]  # iterating again draws a new random order

def collate(batch):
    # batch is a list of (x, y) pairs: split them and stack each side into one tensor
    xs, ys = zip(*batch)
    return torch.stack(xs), torch.stack(ys)

class DataLoader():
    def __init__(self, ds, sampler, collate_fn=collate):
        self.ds, self.sampler, self.collate_fn = ds, sampler, collate_fn

    def __iter__(self):
        for s in self.sampler: yield self.collate_fn([self.ds[i] for i in s])

train_samp = Sampler(small_ds, bs, shuffle=True)
train_dl = DataLoader(small_ds, sampler=train_samp, collate_fn=collate)
next(iter(train_dl))

# unpacking test: zip(*pairs) regroups the x's and the y's
test_list = [('x1', 'y1'), ('x2', 'y2'), ('x3', 'y2')]
list(zip(*test_list))  # [('x1', 'x2', 'x3'), ('y1', 'y2', 'y2')]
for i in zip(*test_list):
    print(i)
    break
```
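The Sampler/collate split in A12 can be mirrored in plain Python: the sampler only produces index batches (reshuffled on each pass), and collate turns a list of (x, y) pairs into an (xs, ys) pair with `zip(*batch)`. `ShuffleSampler`, `collate_lists` and `load` are hypothetical names, and `random.Random` stands in for `torch.randperm`.

```python
import random

class ShuffleSampler:
    def __init__(self, n, bs, shuffle=False, seed=None):
        self.n, self.bs, self.shuffle = n, bs, shuffle
        self.rng = random.Random(seed)

    def __iter__(self):
        idxs = list(range(self.n))
        if self.shuffle:
            self.rng.shuffle(idxs)  # a new permutation on every pass
        for i in range(0, self.n, self.bs):
            yield idxs[i:i + self.bs]

def collate_lists(batch):
    # [(x0, y0), (x1, y1), ...] -> ([x0, x1, ...], [y0, y1, ...])
    xs, ys = zip(*batch)
    return list(xs), list(ys)

def load(pairs, sampler):
    # the loader just glues the two together: indices -> items -> collated batch
    for idx_batch in sampler:
        yield collate_lists([pairs[i] for i in idx_batch])

pairs = [(i, i * i) for i in range(10)]
samp = ShuffleSampler(len(pairs), bs=4, shuffle=True, seed=0)
for xb, yb in load(pairs, samp):
    print(xb, yb)  # each y is the square of the matching x, whatever the order
```

Keeping the sampler ignorant of the data means the same loader can switch between shuffled training order and sequential validation order just by swapping samplers.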

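A13.

```python3
from torch.utils.data import DataLoader, SequentialSampler, RandomSampler

valid_ds = Dataset(x_valid, y_valid)  # x_valid, y_valid assumed loaded alongside x_train

train_dl = DataLoader(train_ds, bs, sampler=RandomSampler(train_ds), collate_fn=collate)
valid_dl = DataLoader(valid_ds, bs, sampler=SequentialSampler(valid_ds), collate_fn=collate)

# or let DataLoader build the samplers itself (and use its default collate);
# drop_last=True discards the final incomplete training batch
train_dl = DataLoader(train_ds, bs, shuffle=True, drop_last=True)
valid_dl = DataLoader(valid_ds, bs, shuffle=False)
```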

Part2 lesson 9 Note | fastai 2019 course -v3
