Notes from Sylvain Gugger’s talk at a joint NYC AI & ML x NYC PyTorch event on 03/20/2019. Video / Slide

(*Sylvain’s talk starts at around 7m 30s)

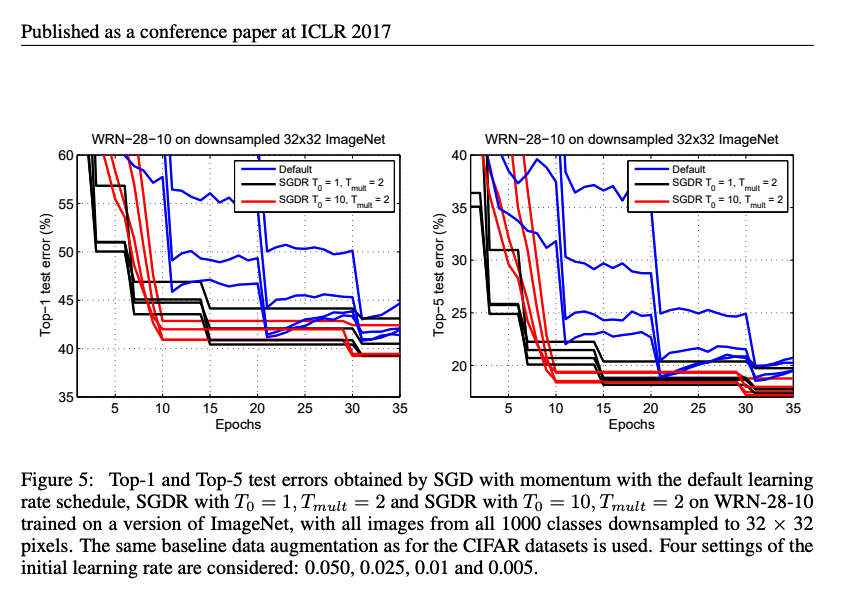

Why do we need callbacks?

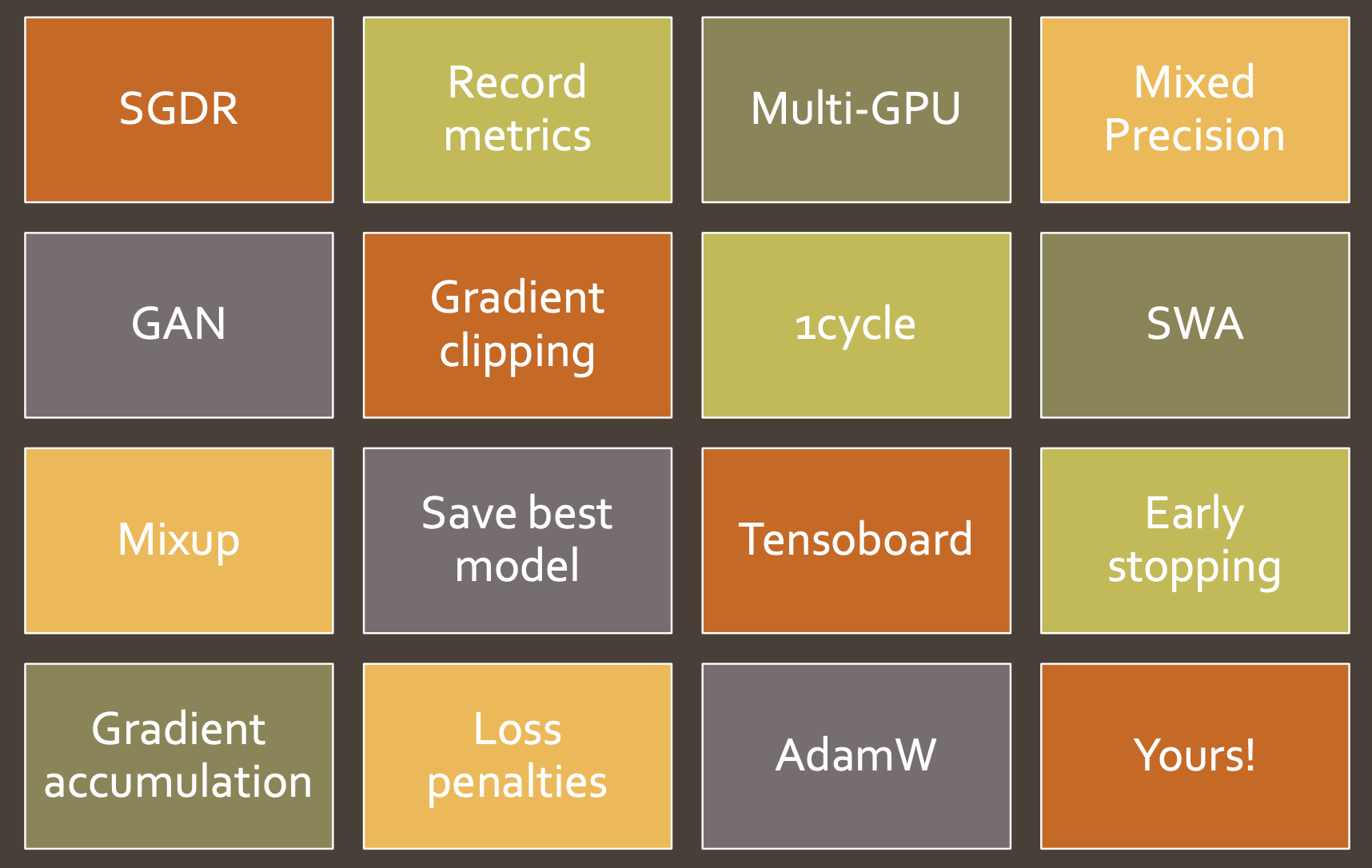

Problem: There are lots of tweaks we can add when training a model, and writing each one into the training loop makes the code messy.

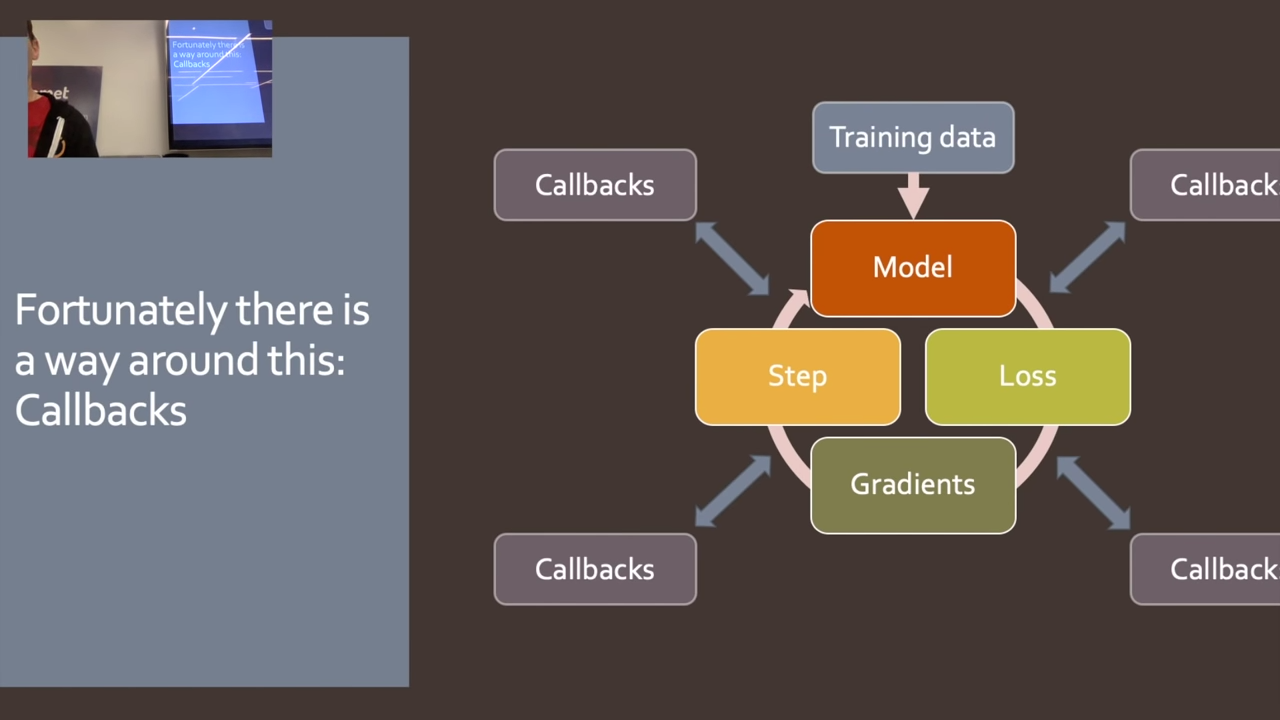

So, instead of editing the training code directly, we add callbacks between every step.

As a result, each callback can feed a new strategy into a training step while knowing what is happening in all the other callbacks and training steps. That’s what CallbackHandler does.

Then you don’t have to rewrite the training loop every time a new tweak comes out.
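A minimal sketch of this idea, assuming a toy one-parameter model trained by hand-written SGD. The hook names (`on_train_begin`, `on_batch_begin`, `on_loss_end`, `on_step_end`, `on_train_end`) and the `state` dictionary are illustrative choices, not the API shown in the talk. The point is that `fit` calls the handler between steps and never has to change when a new callback is added.

```python
class Callback:
    """Base class: every hook is a no-op, so subclasses override only what they need."""
    def on_train_begin(self, state): pass
    def on_batch_begin(self, state): pass
    def on_loss_end(self, state): pass
    def on_step_end(self, state): pass
    def on_train_end(self, state): pass


class CallbackHandler:
    """Forwards each event to every registered callback, in order."""
    def __init__(self, callbacks):
        self.callbacks = list(callbacks)

    def __call__(self, event, state):
        for cb in self.callbacks:
            getattr(cb, event)(state)


def fit(data, epochs, lr, handler):
    """Fit y = w * x with plain SGD; the handler is called between every step."""
    state = {"w": 0.0, "lr": lr, "loss": None, "iteration": 0}
    handler("on_train_begin", state)
    for _ in range(epochs):
        for x, y in data:
            handler("on_batch_begin", state)
            pred = state["w"] * x                 # forward pass
            state["loss"] = (pred - y) ** 2       # squared error
            handler("on_loss_end", state)
            grad = 2 * (pred - y) * x             # d(loss)/dw, computed by hand
            state["w"] -= state["lr"] * grad      # optimizer step
            state["iteration"] += 1
            handler("on_step_end", state)
    handler("on_train_end", state)
    return state


class LossRecorder(Callback):
    """One tweak, one callback: record the loss of every batch."""
    def on_train_begin(self, state):
        self.losses = []

    def on_loss_end(self, state):
        self.losses.append(state["loss"])


data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]
recorder = LossRecorder()
final = fit(data, epochs=20, lr=0.05, handler=CallbackHandler([recorder]))
print(final["w"], len(recorder.losses))
```

Adding another behavior means writing another `Callback` subclass and passing it to `CallbackHandler`; `fit` stays untouched.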

Things a callback can do: update values, set flags, and stop training.
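These three abilities can be sketched with two small callbacks. This is an illustration under assumed names: `LinearLRDecay`, `StopOnLowLoss`, the `stop_training` flag, and the `state` dictionary are hypothetical and not taken from the talk. One callback overwrites a value (the learning rate), the other sets a flag that the loop checks in order to stop.

```python
class Callback:
    """Base class with no-op hooks."""
    def on_batch_begin(self, state): pass
    def on_loss_end(self, state): pass


class LinearLRDecay(Callback):
    """Updates a value: overwrite the learning rate before each batch."""
    def __init__(self, start, end, total_steps):
        self.start, self.end, self.total_steps = start, end, total_steps

    def on_batch_begin(self, state):
        frac = state["iteration"] / max(1, self.total_steps - 1)
        state["lr"] = self.start + frac * (self.end - self.start)


class StopOnLowLoss(Callback):
    """Sets a flag: ask the loop to stop once the loss is small enough."""
    def __init__(self, threshold):
        self.threshold = threshold

    def on_loss_end(self, state):
        if state["loss"] < self.threshold:
            state["stop_training"] = True


def fit(data, epochs, callbacks):
    """Fit y = w * x with SGD; callbacks control the learning rate and stopping."""
    state = {"w": 0.0, "lr": 0.0, "loss": None,
             "iteration": 0, "stop_training": False}
    for _ in range(epochs):
        for x, y in data:
            for cb in callbacks:
                cb.on_batch_begin(state)
            pred = state["w"] * x
            state["loss"] = (pred - y) ** 2
            for cb in callbacks:
                cb.on_loss_end(state)
            if state["stop_training"]:            # the loop only reads the flag
                return state
            state["w"] -= state["lr"] * 2 * (pred - y) * x
            state["iteration"] += 1
    return state


data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]
total_steps = 50 * len(data)
result = fit(
    data,
    epochs=50,
    callbacks=[LinearLRDecay(0.05, 0.01, total_steps), StopOnLowLoss(1e-6)],
)
print(result["iteration"], result["w"])
```

The loop itself contains no scheduling or stopping logic; it only reads `lr` and `stop_training` from the shared state, which is what lets new strategies be added without rewriting it.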

How to build a flexible callback?

Each new tweak can be written as its own individual callback.

Case Study 1. Mixed Precision

If you can represent the process as a diagram, writing the callbacks becomes easier.
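The diagram from the talk is not reproduced in these notes, so the sketch below assumes the usual mixed-precision recipe: run the model in half precision, scale the loss before the backward pass, unscale the gradient in full precision, update a full-precision master weight, and copy it back to the half-precision model. Each box of that process becomes one hook. Everything here is a toy assumption: the hook names, the `state` keys (`cast`, `opt_key`, `loss_grad`), and the use of `struct`'s `"e"` format to simulate half-precision rounding in pure Python. The inputs are deliberately tiny so that the unscaled gradient underflows to zero.

```python
import struct


def to_half(x):
    """Round a float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("e", struct.pack("e", x))[0]


class MixedPrecision:
    """Hypothetical callback: one hook per box of the mixed-precision process."""
    def __init__(self, loss_scale):
        self.loss_scale = loss_scale

    def on_train_begin(self, state):
        state["master_w"] = state["w"]            # full-precision master copy
        state["w"] = to_half(state["w"])          # the model weight lives in half precision
        state["cast"] = to_half                   # gradients are stored in half precision
        state["opt_key"] = "master_w"             # the optimizer updates the master copy

    def on_loss_end(self, state):
        state["loss_grad"] *= self.loss_scale     # scale the loss before backward

    def on_backward_end(self, state):
        state["grad"] = state["grad"] / self.loss_scale   # unscale in full precision

    def on_step_end(self, state):
        state["w"] = to_half(state["master_w"])   # copy the master weight back to the model


def fit(data, epochs, lr, cb):
    """Fit y = w * x; the loop knows nothing about precision or loss scaling."""
    state = {"w": 0.0, "lr": lr, "cast": float, "opt_key": "w"}
    cb.on_train_begin(state)
    for _ in range(epochs):
        for x, y in data:
            pred = state["w"] * x
            state["loss"] = (pred - y) ** 2
            state["loss_grad"] = 1.0              # seed of the backward pass
            cb.on_loss_end(state)
            # Backward pass by hand; the gradient is stored at the model's precision.
            state["grad"] = state["cast"](state["loss_grad"] * 2 * (pred - y) * x)
            cb.on_backward_end(state)
            state[state["opt_key"]] -= state["lr"] * state["grad"]
            cb.on_step_end(state)
    return state


# Tiny inputs give gradients around 2e-8, below what half precision can store.
# The large learning rate only compensates for the tiny inputs.
data = [(1e-4, 1e-4)]                             # the target weight is 1.0
unscaled = fit(data, epochs=30, lr=2e7, cb=MixedPrecision(loss_scale=1.0))
scaled = fit(data, epochs=30, lr=2e7, cb=MixedPrecision(loss_scale=1024.0))
print(unscaled["master_w"], scaled["master_w"])
```

Without scaling, every gradient rounds to zero in half precision and the weight never moves; with a loss scale of 1024 the same loop trains. The training loop is identical in both runs, and only the callback's configuration differs.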

Part2 lesson 10, 04 callbacks | fastai 2019 course -v3
